Understanding the Essential Roles of CPUs, GPUs, NPUs, and TPUs in AI and ML

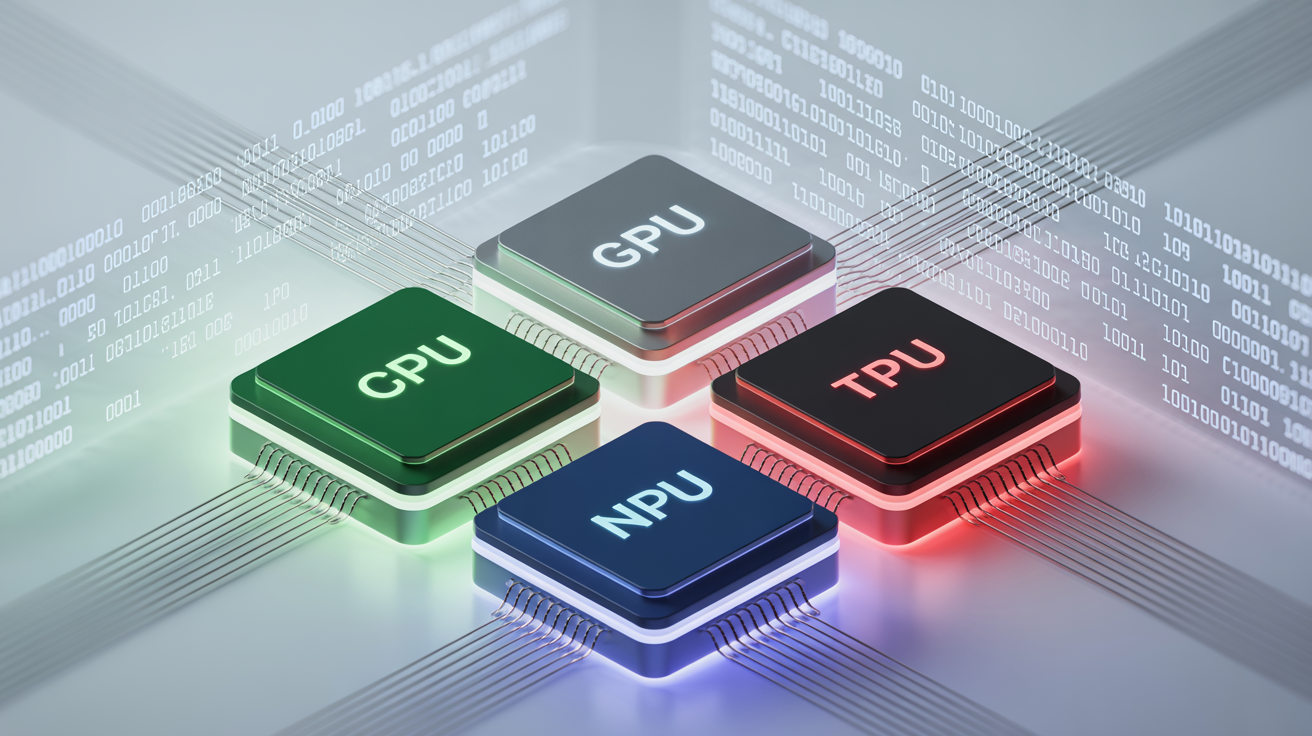

The rapid advancement of artificial intelligence (AI) and machine learning (ML) has driven the development of specialized hardware that delivers computational efficiency beyond what traditional CPUs can offer. Each of the four processing units (CPU, GPU, NPU, and TPU) plays a distinct role within the AI ecosystem and is tailored to specific models, applications, and environments.

Central Processing Unit (CPU): The Versatile Workhorse

Design & Strengths: CPUs are general-purpose processors built around a small number of powerful cores. They excel at single-threaded tasks and at running a wide variety of software, including operating systems and databases.

AI/ML Role: CPUs can run any AI model, but they lack the massive parallelism needed for efficient deep learning training or large-scale inference.

Best for:

- Classical machine learning algorithms (e.g., scikit-learn, XGBoost)

- Prototyping and model development

- Inference for smaller models or low-throughput requirements

Graphics Processing Unit (GPU): The Parallel Powerhouse

GPUs are designed to execute many operations simultaneously across a large number of simpler cores, making them well suited to training complex neural networks. This highly parallel architecture lets them process vast amounts of data at high throughput.
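To make the idea of parallelism concrete, here is a minimal plain-Python sketch. It is an illustration, not GPU code. It fills in a matrix product one output element at a time in a shuffled order, showing that each element depends only on one row and one column, which is why such work can in principle be spread across many cores at once. The function names `matmul_cell` and `matmul_any_order` are hypothetical and chosen only for this example.

```python
import random


def matmul_cell(a, b, i, j):
    """Compute one output element: dot product of row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a, b, seed=0):
    """Fill the output matrix in a shuffled order to show the cells are independent."""
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(cells)  # the visiting order does not affect the result
    out = [[0] * cols for _ in range(rows)]
    for i, j in cells:
        out[i][j] = matmul_cell(a, b, i, j)
    return out


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_any_order(a, b))  # [[19, 22], [43, 50]]
```

Because no cell reads another cell's result, the loop over `cells` could be split among as many workers as there are output elements. That independence is the property parallel hardware exploits.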

Neural Processing Unit (NPU): The AI Specialist

NPUs are designed specifically to accelerate neural network operations. They are best suited to environments that demand high efficiency and low power consumption, such as smartphones, laptops, and other edge devices that run inference on the device itself.

Tensor Processing Unit (TPU): The Google Innovation

Developed by Google, TPUs are custom-built chips that accelerate tensor operations, chiefly the large matrix multiplications at the core of deep learning. They offer high throughput and efficiency, particularly in large-scale machine learning tasks.
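As a rough piece of arithmetic for why tensor operations dominate deep learning cost, the sketch below counts the multiply-accumulate operations (MACs) in a single dense layer. The layer sizes are made up for illustration, and `dense_layer_macs` is a hypothetical helper, not part of any library.

```python
def dense_layer_macs(batch, in_features, out_features):
    """MACs for multiplying a (batch x in_features) input by an
    (in_features x out_features) weight matrix: one MAC per
    (batch row, input feature, output feature) triple."""
    return batch * in_features * out_features


# Illustrative sizes only (assumed, not taken from the article).
macs = dense_layer_macs(batch=32, in_features=1024, out_features=4096)
print(macs)  # 134217728
```

Even this one modest layer needs over a hundred million MACs per forward pass, which suggests why hardware dedicated to this single operation can pay off at scale.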

Understanding how CPUs, GPUs, NPUs, and TPUs differ helps professionals make informed decisions about which hardware to use for their specific AI and ML needs. Each unit's design and capabilities serve different stages of model training and deployment, which is why selecting the right tool for the job matters.
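The guidance in this article can be condensed into a small lookup, sketched below in Python. This is only a rule-of-thumb summary of the roles described here, not a definitive selection method; the workload labels and the `suggest_processor` function are hypothetical names invented for this example.

```python
# Workload label -> processor the article associates with it.
# The labels are hypothetical; real decisions also depend on cost,
# availability, and software support.
SUGGESTED_PROCESSOR = {
    "classical_ml": "CPU",
    "prototyping": "CPU",
    "small_model_inference": "CPU",
    "neural_network_training": "GPU",
    "low_power_neural_workload": "NPU",
    "large_scale_ml": "TPU",
}


def suggest_processor(workload):
    """Return the processor type suggested for a workload label."""
    try:
        return SUGGESTED_PROCESSOR[workload]
    except KeyError:
        raise ValueError(f"unknown workload label: {workload!r}") from None


print(suggest_processor("classical_ml"))             # CPU
print(suggest_processor("neural_network_training"))  # GPU
```

A table like this is a starting point for discussion rather than a final answer, since many workloads can run acceptably on more than one kind of processor.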

Rocket Commentary

The article rightly highlights the distinct roles of CPUs, GPUs, NPUs, and TPUs in the AI landscape, emphasizing the need for specialized hardware to meet the demands of advanced AI applications. However, while these advancements promise enhanced efficiency, we must remain vigilant about the accessibility of such technologies. The rapid evolution of AI hardware should not exacerbate the digital divide; instead, it should empower businesses of all sizes to harness AI's transformative potential. As we navigate this complex ecosystem, prioritizing ethical considerations and fostering inclusive access to these specialized tools will be crucial for sustainable growth and innovation in the AI sector.
