TransEvalnia: A New Era in Translation Evaluation with LLMs

Recent advances in translation systems powered by large language models (LLMs) have produced remarkable capabilities, in some settings rivaling or even surpassing human translators. As LLMs take on more complex tasks, such as document-level and literary translation, effective methods for evaluating their output have become critical.

Traditional metrics such as BLEU have long been the standard for assessing machine translation quality, but they are increasingly seen as inadequate. BLEU scores a translation by its n-gram overlap with one or more reference translations, so it can penalize valid paraphrases and says little about the finer points of quality. That gap matters more as translations approach human-level proficiency.

The Limitations of Traditional Metrics

While BLEU and similar metrics produce a numerical score, they do not explain how that score was reached or which parts of a translation caused it. This lack of transparency makes it hard for users to fully understand a translation's quality, and it has fueled growing demand for evaluation systems that go beyond a single number.
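To make the limitation of BLEU concrete, here is a minimal sentence-level BLEU sketch in Python, written for this summary rather than taken from any toolkit. It clips n-gram matches against a single reference, takes the geometric mean of the 1- to 4-gram precisions, and applies a brevity penalty. Whitespace tokenization and the absence of smoothing are simplifying assumptions; established implementations such as sacreBLEU handle both more carefully.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count every n-gram (as a tuple) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU against a single reference.

    Tokenizes by whitespace (a simplifying assumption) and returns a
    score in [0, 1].
    """
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram's count by its count in the reference.
        overlap = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0:
            return 0.0  # without smoothing, one zero precision zeroes the score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(cand), len(ref)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions, uniformly weighted.
    return bp * math.exp(sum(log_precisions) / max_n)


print(sentence_bleu("the cat sat on the mat", "the cat sat on the mat"))  # 1.0
print(sentence_bleu("the cat is on the mat", "the cat sat on the mat"))   # 0.0
```

Changing a single word ("sat" to "is") leaves no matching 4-gram, so this unsmoothed version drops all the way to 0.0. The number by itself does not say which word was responsible or whether the change matters to a reader.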

Introducing TransEvalnia

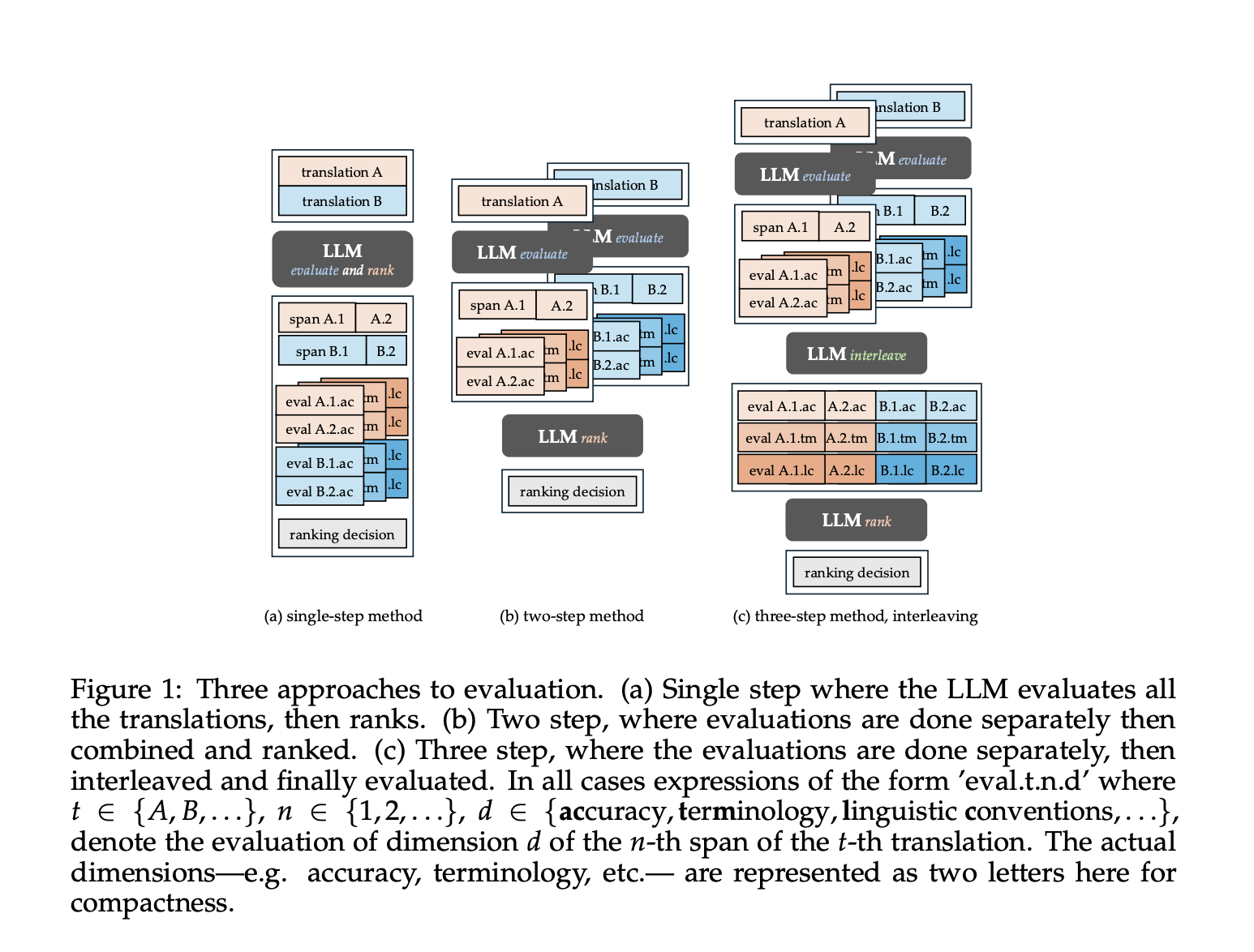

To address these challenges, researchers have developed TransEvalnia, a prompting-based system for fine-grained, human-aligned translation evaluation. Instead of producing a single score, it assesses translations along several dimensions, including:

- Accuracy: Ensuring the translation conveys the original message accurately.

- Terminology: Maintaining consistency and appropriateness of specific terms.

- Audience Suitability: Tailoring translations to meet the expectations of different target audiences.

TransEvalnia's framework makes evaluation more transparent. Users can see not only how good a translation is but also where its potential errors lie, which helps them make informed decisions about which translation output to use.
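To illustrate what an explained, per-dimension evaluation might look like as data, here is a minimal Python sketch. Everything in it is hypothetical and written for this summary: the class names, the 1-to-5 score scale, the prompt wording, and the simple averaging are assumptions, not TransEvalnia's actual format or API. Only the three dimension names come from the list above.

```python
from dataclasses import dataclass, field

# Hypothetical: only the three dimensions named in this article.
DIMENSIONS = ("accuracy", "terminology", "audience_suitability")


@dataclass
class DimensionAssessment:
    dimension: str
    score: int        # assumed 1-5 scale (illustrative only)
    explanation: str  # the rationale that a bare metric score lacks

    def __post_init__(self):
        # Reject dimensions and scores outside the assumed scheme.
        if self.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {self.dimension}")
        if not 1 <= self.score <= 5:
            raise ValueError("score must be between 1 and 5")


@dataclass
class TranslationEvaluation:
    source: str
    translation: str
    assessments: list[DimensionAssessment] = field(default_factory=list)

    def add(self, dimension: str, score: int, explanation: str) -> None:
        self.assessments.append(DimensionAssessment(dimension, score, explanation))

    def mean_score(self) -> float:
        """Unweighted mean over assessed dimensions (an illustrative aggregate)."""
        if not self.assessments:
            raise ValueError("no assessments recorded")
        return sum(a.score for a in self.assessments) / len(self.assessments)

    def report(self) -> str:
        """One line per dimension with its explanation, then the overall mean."""
        lines = [f"{a.dimension}: {a.score}/5 - {a.explanation}" for a in self.assessments]
        lines.append(f"overall: {self.mean_score():.2f}")
        return "\n".join(lines)


def build_prompt(source: str, translation: str) -> str:
    """Hypothetical prompt asking an LLM to explain, then score, each dimension."""
    dims = "\n".join(f"- {d}" for d in DIMENSIONS)
    return (
        "Evaluate the translation below. For each dimension, first explain "
        "your reasoning, then give a score from 1 to 5.\n"
        f"Dimensions:\n{dims}\n\n"
        f"Source: {source}\nTranslation: {translation}\n"
    )


# Usage with made-up judgments (not produced by any model):
ev = TranslationEvaluation("Der Vertrag tritt morgen in Kraft.",
                           "The contract takes effect tomorrow.")
ev.add("accuracy", 5, "The meaning is fully preserved.")
ev.add("terminology", 4, "'Takes effect' is correct; 'enters into force' is more formal.")
ev.add("audience_suitability", 3, "Slightly informal for a legal readership.")
print(ev.report())
print(ev.mean_score())  # 4.0
```

The point of the structure is that every score travels with its explanation, so a reader can see why a dimension scored low instead of receiving one opaque number. In a prompting-based system, an LLM would be asked to produce those explanations and scores, for instance from a prompt like the one `build_prompt` returns.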

The Future of Evaluation Metrics

The effectiveness of newer learned metrics such as BLEURT, COMET, and MetricX, which build on pretrained language models and track human judgments more closely than BLEU, further shows how translation evaluation is shifting. Their more refined assessments respond to users' evolving needs in an increasingly competitive translation market.

As LLM capabilities continue to expand, the translation industry is poised for significant change. Systems like TransEvalnia are an important step toward evaluation that is both more accurate and easier to interpret, helping stakeholders keep pace with the technology.

Rocket Commentary

The article highlights a pivotal moment in the evolution of translation technology and the impressive capabilities of large language models (LLMs). Those capabilities make the move from traditional metrics like BLEU to more nuanced assessment methods essential. As LLMs approach human-level proficiency, the industry must prioritize comprehensive evaluation frameworks that capture the subtleties of language and context. This shift not only supports higher-quality translations but also strengthens user trust and accessibility. Ultimately, embracing ethical practices in AI development will help transform communication across cultures, making technology a powerful force in global business and development.

Read the Original Article

This summary was created from the original article.
