Stanford Researchers Unveil ACE Framework to Enhance LLM Performance

A team from Stanford University, SambaNova Systems, and UC Berkeley has introduced Agentic Context Engineering (ACE), a framework for improving the performance of large language models (LLMs) without changing their weights. Instead of fine-tuning model parameters, ACE improves results by systematically editing and expanding the input context the model receives.

The Innovative ACE Framework

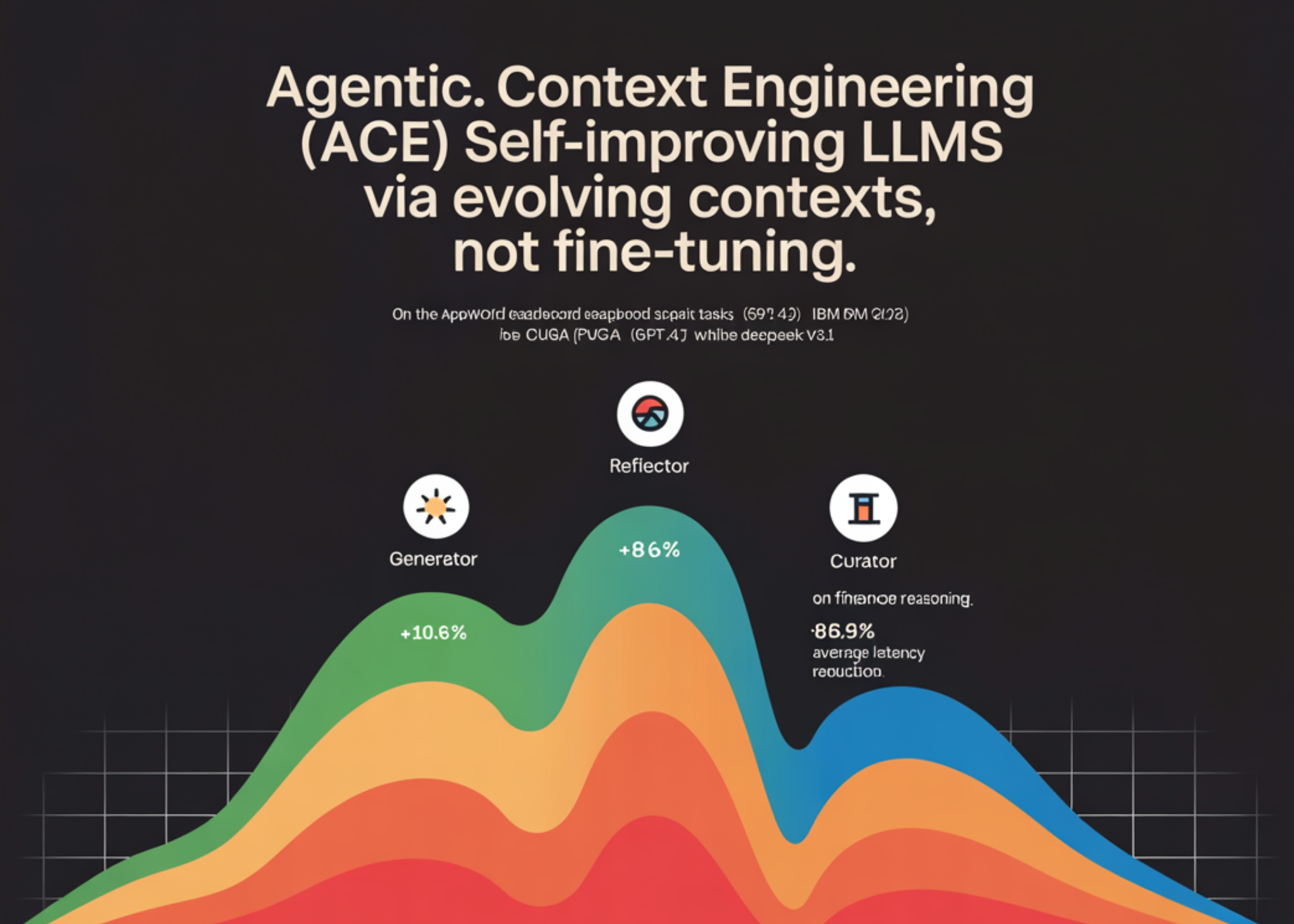

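The ACE framework treats context as an evolving "playbook" rather than a fixed prompt. Three roles divide the work: a Generator attempts tasks and produces reasoning trajectories, a Reflector critiques those trajectories to extract concrete lessons from successes and errors, and a Curator turns the lessons into small "delta" items that are merged incrementally into the existing playbook. Because the playbook is updated piece by piece instead of being rewritten wholesale, ACE aims to avoid two failure modes of earlier context-adaptation methods: brevity bias, where optimized prompts drift toward short, generic instructions that drop domain detail, and context collapse, where repeated end-to-end rewriting gradually compresses accumulated knowledge into a less informative summary.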
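The Generator, Reflector, Curator loop can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: every name here (`Bullet`, `DeltaItem`, `Playbook`, `ace_step`, and the `toy_*` stubs) is hypothetical, the LLM calls behind each role are replaced by trivial stand-in functions, and the bullet format with helpful/harmful counters is an assumed schema. The point it shows is that each step produces small delta items that are appended or tagged, so existing entries are never paraphrased away.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Bullet:
    """One itemized playbook entry (hypothetical schema)."""
    bullet_id: str
    section: str
    text: str
    helpful: int = 0
    harmful: int = 0


@dataclass
class DeltaItem:
    """A small edit proposed by the Curator (hypothetical format)."""
    op: str               # "add" a new bullet or "tag" an existing one
    bullet_id: str
    section: str = ""
    text: str = ""
    helpful: bool = True  # only used by "tag"


@dataclass
class Playbook:
    bullets: dict[str, Bullet] = field(default_factory=dict)

    def merge(self, deltas: list[DeltaItem]) -> None:
        """Apply deltas with plain deterministic code; nothing is rewritten wholesale."""
        for d in deltas:
            if d.op == "add" and d.bullet_id not in self.bullets:
                self.bullets[d.bullet_id] = Bullet(d.bullet_id, d.section, d.text)
            elif d.op == "tag" and d.bullet_id in self.bullets:
                bullet = self.bullets[d.bullet_id]
                if d.helpful:
                    bullet.helpful += 1
                else:
                    bullet.harmful += 1

    def render(self) -> str:
        """Serialize the playbook into text that can be placed in the model's context."""
        return "\n".join(
            f"[{b.bullet_id}] ({b.section}) {b.text}"
            for b in sorted(self.bullets.values(), key=lambda b: (b.section, b.bullet_id))
        )


def ace_step(
    query: str,
    playbook: Playbook,
    generate: Callable[[str, str], str],        # Generator: (query, context) -> trajectory
    evaluate: Callable[[str], str],             # environment feedback on the trajectory
    reflect: Callable[[str, str], list[str]],   # Reflector: (trajectory, feedback) -> lessons
    curate: Callable[[list[str], Playbook], list[DeltaItem]],  # Curator: lessons -> deltas
) -> str:
    """Run one adaptation step and merge the resulting deltas into the playbook."""
    trajectory = generate(query, playbook.render())
    lessons = reflect(trajectory, evaluate(trajectory))
    playbook.merge(curate(lessons, playbook))
    return trajectory


# Trivial stand-ins for what would be LLM calls and an execution environment.
def toy_generate(query: str, context: str) -> str:
    return f"Q: {query}\nCONTEXT:\n{context}"


def toy_evaluate(trajectory: str) -> str:
    return "called payment API before login"


def toy_reflect(trajectory: str, feedback: str) -> list[str]:
    return [f"Avoid: {feedback}"] if feedback else []


def toy_curate(lessons: list[str], playbook: Playbook) -> list[DeltaItem]:
    start = len(playbook.bullets)
    return [DeltaItem("add", f"b{start + i}", "failure_modes", text)
            for i, text in enumerate(lessons)]


pb = Playbook()
ace_step("pay the electricity bill", pb, toy_generate, toy_evaluate, toy_reflect, toy_curate)
print(pb.render())  # [b0] (failure_modes) Avoid: called payment API before login
```

In this sketch, merging is ordinary code rather than another model call, which is one way to keep earlier lessons intact. Running the step a second time adds an identical lesson under a new ID, so a real system would also need some periodic de-duplication and pruning.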

Reported Gains and Efficiency

According to the team's results, ACE improved accuracy on both agent and domain-specific reasoning benchmarks while also reducing the cost of adapting the context. Reported gains include:

- +10.6% on AppWorld agent tasks

- +8.6% on finance reasoning tasks

- 86.9% lower average adaptation latency than established context-adaptation baselines
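To put the latency figure in perspective, it can be read as a single before/after ratio. This is a simplifying assumption: the reported number is an average across tasks, so the speedup on any particular task will differ. The symbols $T_{\text{base}}$ and $T_{\text{ACE}}$ are introduced here for illustration only.

```latex
\text{reduction} = 1 - \frac{T_{\text{ACE}}}{T_{\text{base}}} = 0.869
\;\Rightarrow\; T_{\text{ACE}} \approx 0.131\,T_{\text{base}},
\qquad \frac{T_{\text{base}}}{T_{\text{ACE}}} \approx \frac{1}{0.131} \approx 7.6
```

Under that reading, an adaptation run that takes 100 seconds with a baseline would take roughly 13 seconds with ACE, about a 7.6x speedup.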

On the AppWorld leaderboard snapshot of September 20, 2025, ReAct+ACE scored 59.4% using the open-source DeepSeek-V3.1 model, close to IBM CUGA's 60.3%, which is built on GPT-4.1.

Shifting Paradigms in Context Engineering

ACE positions context engineering as a practical alternative to parameter updates. Rather than compressing instructions into short prompts, it accumulates and organizes domain-specific strategies over time. The authors argue that denser, more detailed context improves performance on agentic tasks, particularly those involving tool use, multi-turn state, and recurring failure modes.
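Accumulating strategies only helps if the growing playbook stays organized. The following is a minimal sketch of one possible refinement pass, under assumptions that are not from the source: each bullet carries hypothetical helpful/harmful counters, bullets flagged harmful more often than helpful are pruned, and near-duplicates within a section are folded together. Python's `difflib.SequenceMatcher` is used here as a cheap stand-in for semantic similarity; a real system might compare embeddings instead. The names `Bullet`, `refine`, and `render` are illustrative only.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Bullet:
    """One playbook entry with usage counters (hypothetical schema)."""
    bullet_id: str
    section: str
    text: str
    helpful: int = 0
    harmful: int = 0


def refine(bullets: list[Bullet], threshold: float = 0.9) -> list[Bullet]:
    """Prune harmful bullets, then fold near-duplicates in the same section together."""
    kept: list[Bullet] = []
    for b in bullets:
        # A bullet is judged on its own counters before any merging.
        if b.harmful > b.helpful:
            continue
        dup = next(
            (k for k in kept
             if k.section == b.section
             and SequenceMatcher(None, k.text.lower(), b.text.lower()).ratio() >= threshold),
            None,
        )
        if dup is None:
            # Copy so the caller's list is not mutated.
            kept.append(Bullet(b.bullet_id, b.section, b.text, b.helpful, b.harmful))
        else:
            # Keep the first wording and accumulate the duplicate's evidence.
            dup.helpful += b.helpful
            dup.harmful += b.harmful
    return kept


def render(bullets: list[Bullet]) -> str:
    """Group bullets by section so the context reads as an organized playbook."""
    sections: dict[str, list[str]] = {}
    for b in bullets:
        sections.setdefault(b.section, []).append(f"- [{b.bullet_id}] {b.text}")
    return "\n".join(f"## {name}\n" + "\n".join(items) for name, items in sections.items())


playbook = [
    Bullet("b0", "strategies", "Log in before calling payment APIs.", helpful=3),
    Bullet("b1", "strategies", "Log in before calling payment APIs!", helpful=1),
    Bullet("b2", "tool_pitfalls", "Paginate list endpoints; page 1 is not everything.", helpful=2),
    Bullet("b3", "strategies", "Guess the account ID.", harmful=2),
]
print(render(refine(playbook)))
```

Here `b1` is folded into `b0` and `b3` is dropped, so the rendered context keeps two bullets under two section headings. The design choice is to grow first and clean up afterward, so detail is only removed when there is evidence against it rather than to keep the prompt short.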

Conclusion

The ACE framework offers a fresh perspective on optimizing LLMs for efficiency and accuracy: improving the context a model works from rather than retraining the model itself. If the reported results hold up more broadly, this approach could reshape best practices for developing and applying language models.

Rocket Commentary

The introduction of the ACE framework by Stanford, SambaNova Systems, and UC Berkeley represents a significant shift in how we approach large language models. By prioritizing context as a dynamic entity rather than a static input, ACE challenges the conventional narrative of model fine-tuning. This innovative perspective not only addresses persistent issues like brevity bias but also opens avenues for more nuanced interactions between AI and users. However, as we embrace this technological evolution, we must ensure that these advancements remain accessible and ethical, fostering an environment where AI can truly be transformative for businesses and society at large. The ACE framework illustrates that the future of AI lies not just in the models themselves but in how we engineer their environments for optimal performance.

Read the Original Article

This summary was created from the original article, which contains the full story.