Revolutionizing LLM Performance: The Mixture-of-Agents Architecture

The Mixture-of-Agents (MoA) architecture is a notable advance in the performance of large language models (LLMs), particularly on complex and open-ended tasks. Single-model architectures often struggle with accuracy, reasoning, and domain-specific knowledge. MoA addresses these limitations by combining the outputs of multiple specialized agents instead of relying on a single model.

How the Mixture-of-Agents Architecture Works

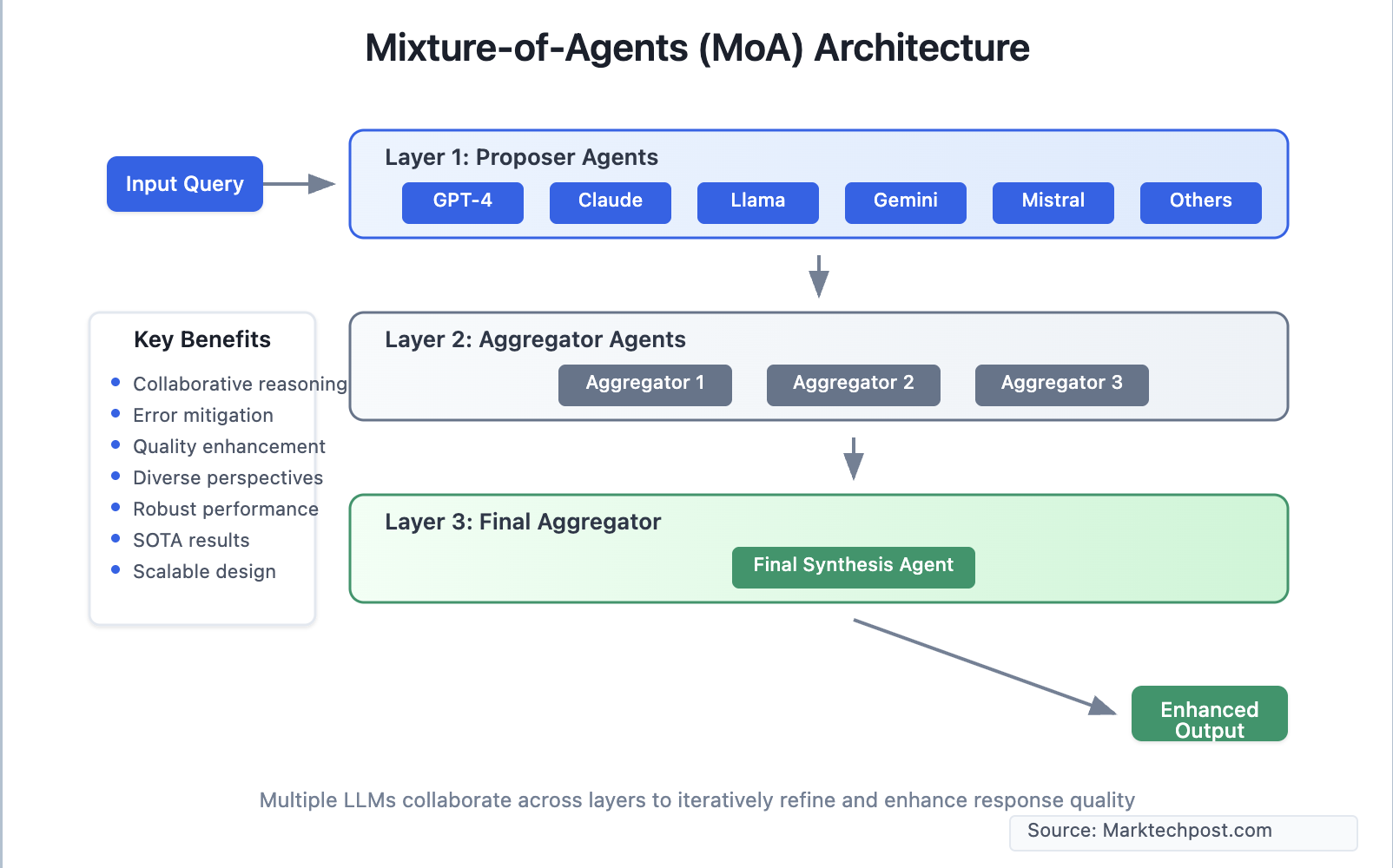

- Layered Structure: The MoA framework organizes multiple specialized LLM agents into layers. Each agent in a layer receives the outputs of the agents in the previous layer as additional context, which it uses to produce a better-informed response.

- Agent Specialization: Agents within the MoA system can be customized for specific domains such as law, medicine, finance, and coding. This specialization allows each agent to contribute unique insights, akin to a team of experts.

- Collaborative Information Synthesis: A prompt is first sent to a set of proposer agents, each of which generates a candidate answer. Subsequent layers aggregate and refine these candidates, and the final layer produces a single, comprehensive response.

- Continuous Refinement: Responses improve iteratively as they pass through the layers, gaining reasoning depth, consistency, and accuracy, much as an expert panel reviews and refines proposals.
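The layered flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the original authors' implementation: `Agent`, `run_moa`, and `make_stub` are hypothetical names, specialization is modeled only as a per-agent system prompt, and the stub models stand in for real LLM calls so the example runs offline. The prompt format used by later layers (numbered previous responses followed by an instruction to synthesize them) is also an illustrative assumption.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical model interface: takes a full prompt string, returns a response.
# In a real system this would wrap a call to an actual LLM.
ModelFn = Callable[[str], str]


@dataclass
class Agent:
    name: str
    system_prompt: str  # assumed way to express specialization, e.g. "You are an expert in law."
    model: ModelFn

    def respond(self, prompt: str, prior_responses: List[str]) -> str:
        # Build the agent's input: its specialization, the previous layer's
        # responses as auxiliary context (if any), and the original user prompt.
        parts = [self.system_prompt]
        if prior_responses:
            parts.append("Responses from the previous layer:")
            parts.extend(f"{i + 1}. {r}" for i, r in enumerate(prior_responses))
            parts.append("Synthesize these into a single improved answer.")
        parts.append(f"User prompt: {prompt}")
        return self.model("\n".join(parts))


def run_moa(prompt: str, layers: List[List[Agent]], aggregator: Agent) -> str:
    """Pass the prompt through each layer of agents, then aggregate.

    The first layer (the proposers) sees only the prompt; every agent in
    layer i sees all responses produced by layer i - 1.
    """
    responses: List[str] = []
    for layer in layers:
        # All agents in a layer read the same previous-layer outputs.
        responses = [agent.respond(prompt, responses) for agent in layer]
    # A final aggregator condenses the last layer's responses into one answer.
    return aggregator.respond(prompt, responses)


def make_stub(tag: str) -> ModelFn:
    # Hypothetical stub model for demonstration: instead of calling an LLM,
    # it reports how many numbered previous-layer responses it was shown.
    def model(full_prompt: str) -> str:
        seen = sum(1 for line in full_prompt.splitlines() if line[:1].isdigit())
        return f"{tag} saw {seen} prior response(s)"
    return model


proposers = [
    Agent(d, f"You are an expert in {d}.", make_stub(d))
    for d in ("law", "finance", "coding")
]
refiners = [Agent("refiner", "You are a careful reviewer.", make_stub("refiner"))]
aggregator = Agent("aggregator", "You are the final aggregator.", make_stub("aggregator"))

print(run_moa("Should a startup open-source its SDK?", [proposers, refiners], aggregator))
# prints: aggregator saw 1 prior response(s)
```

Each layer has to wait for every response from the layer before it, so layers run sequentially. Agents within a single layer are independent of one another, so a real deployment could call them concurrently.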

Why Is MoA Superior to Single-Model LLMs?

MoA systems can outperform conventional single-model LLMs because they draw on multiple specialized agents, each focused on a particular task or domain, and then combine their answers. This combination can improve accuracy and reasoning, which makes MoA a promising direction for future AI development.

As AI continues to evolve, architectures like MoA could be an important step toward more effective and reliable LLMs, with potential impact across industries that depend on complex language processing.

Rocket Commentary

The Mixture-of-Agents (MoA) architecture is a promising step in the evolution of large language models, particularly in its ability to tackle intricate, domain-specific tasks. However, while specialized agents offer an opportunity for improved accuracy and context awareness, they also raise significant concerns about accessibility and ethics. As industries increasingly rely on AI for critical functions, the complexity of MoA systems could create barriers to entry for smaller businesses that lack the resources to integrate such technologies. The potential for bias in specialized agents must also be rigorously addressed to ensure equitable outcomes. As these advances are adopted, it is essential to prioritize transparency and inclusivity in AI development so that these tools serve the broader community rather than a select few.

This summary was created from the original article.