Qwen Unveils Qwen3-Coder-480B-A35B-Instruct: A New Era in Open Agentic Code Models

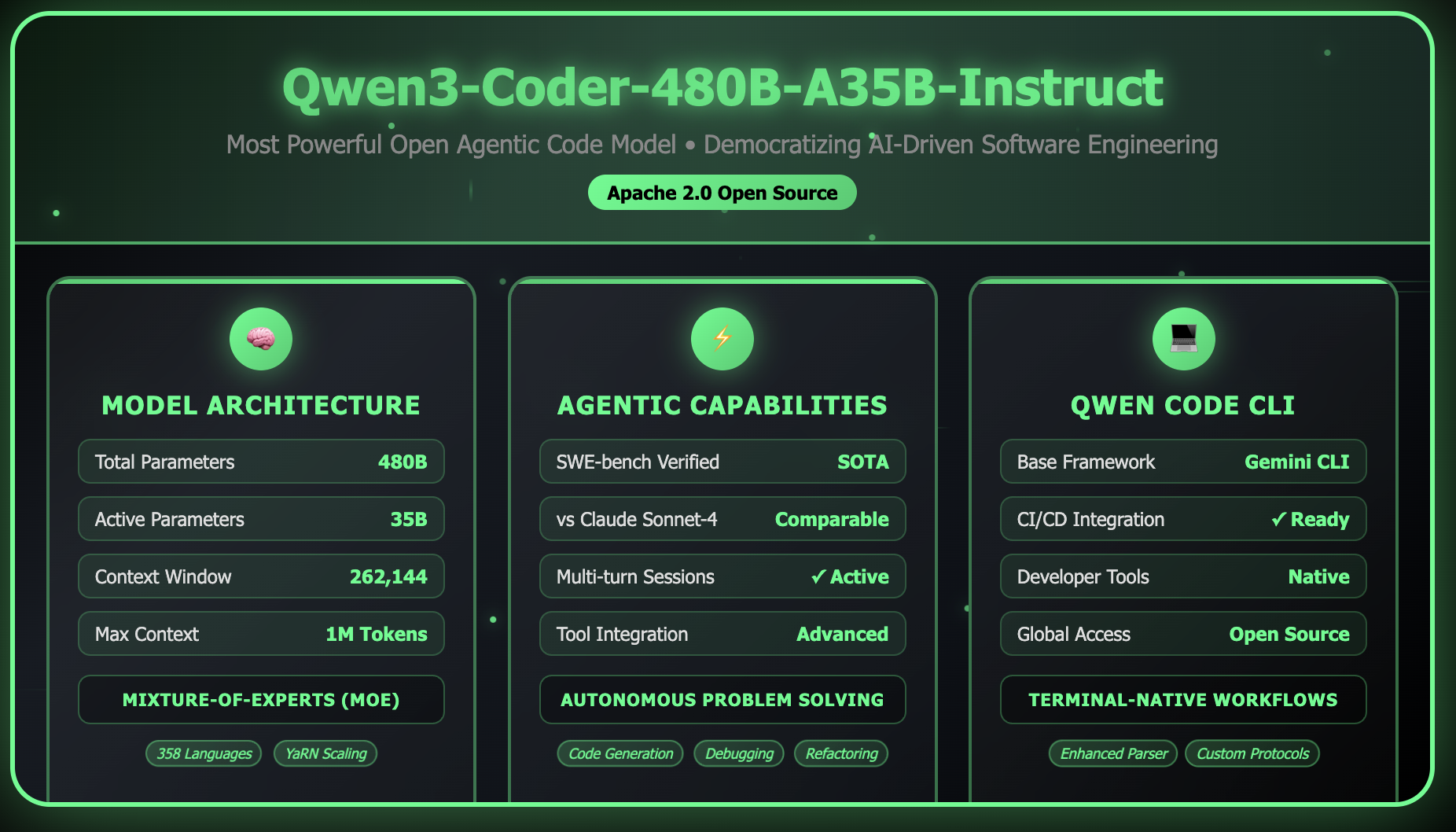

Qwen has released Qwen3-Coder-480B-A35B-Instruct, which it describes as its most powerful open agentic code model to date. The model uses a Mixture-of-Experts (MoE) architecture and is aimed at autonomous developer assistance.

Model Architecture and Specifications

The key specifications of Qwen3-Coder-480B-A35B-Instruct are:

- Model Size: 480 billion total parameters in a Mixture-of-Experts design, of which about 35 billion are active during inference.

- Architecture: 160 experts, with 8 activated per token, so only a small share of the expert weights runs on each forward pass.

- Layers: 62 transformer layers.

- Attention Heads: 96 query heads and 8 key-value heads (grouped-query attention), so several query heads share each key-value head and the key-value cache stays smaller.

- Context Length: Supports a native context of 256K (262,144) tokens, which can be extended to about 1,000,000 tokens through context extrapolation techniques.

- Supported Languages: Compatible with a wide range of programming and markup languages, including Python, JavaScript, Java, C++, Go, and Rust, among others.

- Model Type: A causal language model, released here as an instruction-tuned (Instruct) variant.
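The ratios implied by these figures can be worked out directly. The short Python sketch below uses only the parameter, expert, and head counts quoted in the list; the constant and function names are illustrative and do not come from Qwen.

```python
# Figures quoted in the specification list above.
TOTAL_PARAMS_B = 480    # total parameters, in billions
ACTIVE_PARAMS_B = 35    # parameters active during inference, in billions
NUM_EXPERTS = 160       # experts in the MoE design
ACTIVE_EXPERTS = 8      # experts activated at a time
QUERY_HEADS = 96        # attention heads for queries
KV_HEADS = 8            # attention heads for keys and values


def spec_ratios():
    """Return the ratios implied by the quoted specifications."""
    return {
        "active_param_fraction": ACTIVE_PARAMS_B / TOTAL_PARAMS_B,
        "active_expert_fraction": ACTIVE_EXPERTS / NUM_EXPERTS,
        "query_heads_per_kv_head": QUERY_HEADS // KV_HEADS,
    }


if __name__ == "__main__":
    for name, value in spec_ratios().items():
        print(f"{name}: {value:.4g}")
```

Roughly 7% of the parameters are active at a time, while 5% of the experts are. The gap is consistent with some components, such as attention, being used for every input rather than routed, although the article does not break the parameter count down further.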

Innovative Mixture-of-Experts Design

The MoE architecture activates only a subset of the model’s parameters during inference. Compute per token therefore tracks the roughly 35 billion active parameters rather than the full 480 billion. This approach is intended to deliver state-of-the-art performance while keeping computational overhead well below that of a dense model of the same total size, which is what makes scaling to this parameter count practical.
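To make the idea of activating only a subset of experts concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not Qwen's implementation: the softmax router, the renormalization over the selected experts, the toy scalar "experts", and all function names are assumptions chosen for illustration. Only the counts of 160 experts and 8 active experts come from the article.

```python
import math
import random

NUM_EXPERTS = 160  # from the article
TOP_K = 8          # from the article


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(token, router_logits, experts, top_k=TOP_K):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, top_k))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy experts (hypothetical): each scales its scalar input by a fixed factor.
    experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    picks = route_token(logits)
    print(len(picks), "of", NUM_EXPERTS, "experts selected")
    print("output:", moe_forward(1.0, logits, experts))
```

In this sketch, the cost of `moe_forward` depends on `top_k` expert calls rather than on the total number of experts, which is the property the paragraph above describes.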

Conclusion

With the launch of Qwen3-Coder-480B-A35B-Instruct, Qwen aims to raise the bar for open-source coding models. The release promises to improve developer productivity and to provide more capable tools for autonomous coding assistance.

Rocket Commentary

The release of the Qwen3-Coder-480B-A35B-Instruct is a noteworthy development in the landscape of AI-driven coding assistance, underscoring the ongoing trend towards more sophisticated models. While the ambitious Mixture-of-Experts architecture promises enhanced efficiency and scalability, we must remain vigilant about the ethical implications of such power. As these advanced tools become integral to development workflows, there’s an opportunity to democratize coding by making these technologies accessible to a broader audience. However, we must also question whether the complexities of such models will inadvertently widen the gap between skilled developers and those new to the field. The industry must prioritize transparency and inclusivity to ensure these innovations serve as transformative aids rather than exclusive tools for the few.

This summary was created from the original article.