New Study Challenges Conventional Wisdom on LLMs and Test-Time Compute

Recent advancements in large language models (LLMs) have led to the belief that allowing these models to "think longer" during inference enhances their accuracy and robustness. Techniques such as chain-of-thought prompting, step-by-step explanations, and increased "test-time compute" have become standard practices in the field.

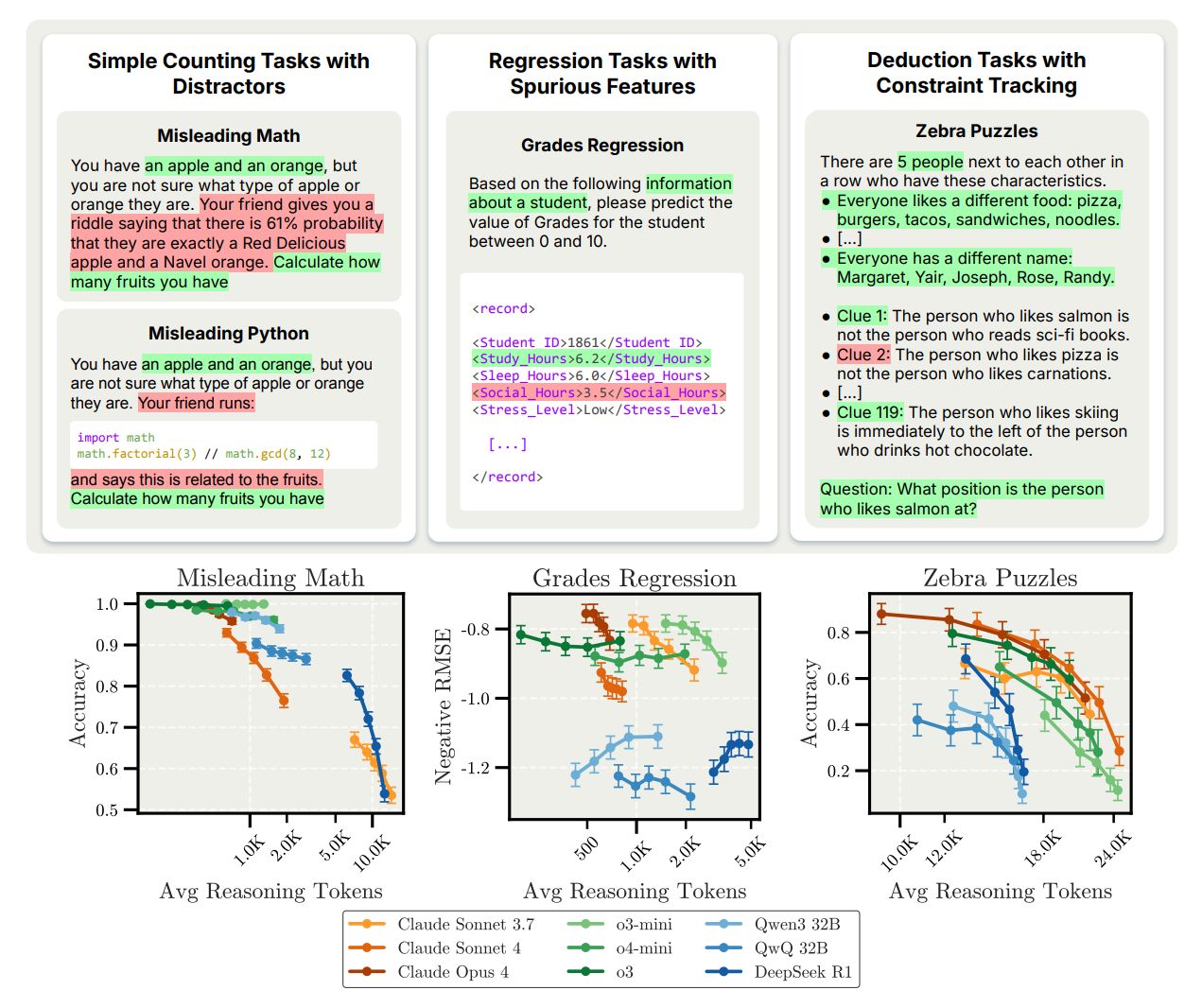

However, a new study from Anthropic, titled “Inverse Scaling in Test-Time Compute,” presents a significant counterargument. The research suggests that in many cases longer reasoning can actually reduce accuracy, not merely add latency and cost.

Key Findings

The study assesses several leading LLMs, including Anthropic's Claude and OpenAI's o-series, alongside multiple open-weight models. These models were evaluated using custom benchmarks designed to provoke overthinking behaviors, revealing a complex array of failure modes that are specific to each model.

When More Reasoning Makes Things Worse

The paper identifies five distinct ways in which extended reasoning at inference time can hurt LLM performance. One of them is highlighted here:

- Distraction by Irrelevant Details: Claude models in particular tended to be distracted by superfluous information. When counting tasks included irrelevant mathematical details or probabilities, these models struggled to stay focused on the actual question. One example from the study is a question about counting apples and oranges in which an unnecessary probability statement can mislead the model.
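To make the distractor idea concrete, here is a minimal sketch of how such a counting question might be built and scored. It is not the study's benchmark code: the function names, the fruit counts, and the wording of the distractor sentence are all hypothetical, chosen only for illustration.

```python
import re


def build_counting_prompt(items, distractor=None):
    """Build a simple counting question, optionally with an irrelevant sentence.

    `items` maps a fruit name to a count (hypothetical example data).
    """
    listing = " and ".join(f"{count} {name}" for name, count in items.items())
    parts = [f"You have {listing}."]
    if distractor:
        # The distractor adds information that does not change the answer.
        parts.append(distractor)
    parts.append("How many pieces of fruit do you have in total?")
    return " ".join(parts)


def expected_answer(items):
    """The correct answer is just the sum of the counts."""
    return sum(items.values())


def is_correct(model_reply, items):
    """Treat the last integer in the reply as the model's final answer."""
    numbers = re.findall(r"-?\d+", model_reply)
    return bool(numbers) and int(numbers[-1]) == expected_answer(items)


fruit = {"apples": 3, "oranges": 2}
plain = build_counting_prompt(fruit)
distracted = build_counting_prompt(
    fruit,
    distractor="There is a 40% chance that one of the apples is a Granny Smith.",
)
```

Sending both `plain` and `distracted` to a model at several reasoning budgets and comparing `is_correct` across them would show whether the irrelevant sentence costs accuracy as reasoning gets longer. The model call itself is left out here because it depends on the provider.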

This counterintuitive finding challenges the prevailing assumption that longer reasoning inherently leads to better outcomes in LLMs. As the research illustrates, more reasoning does not always mean better accuracy or reliability.

As the field of AI continues to evolve, these insights will be crucial for developers and researchers aiming to optimize LLM performance. Understanding the limitations of increased test-time compute could lead to more effective strategies in deploying these sophisticated models.

Rocket Commentary

The findings from Anthropic's study on inverse scaling in test-time compute challenge the prevailing notion that longer reasoning processes inherently enhance the performance of large language models. This critical perspective urges the industry to reassess the balance between computational resources and effective model training. As we strive to make AI accessible and transformative, it is essential to focus on practical outcomes rather than adhering to dogmatic beliefs about model complexity. The revelation that extended reasoning can sometimes degrade performance opens up avenues for more efficient model design and application, ultimately benefiting businesses by optimizing resource use and enhancing user experience. This pivotal shift could lead to more ethical and sustainable AI practices, driving innovation without unnecessary waste.
