Meta Superintelligence Labs Unveils REFRAG: A Leap Forward in RAG Efficiency

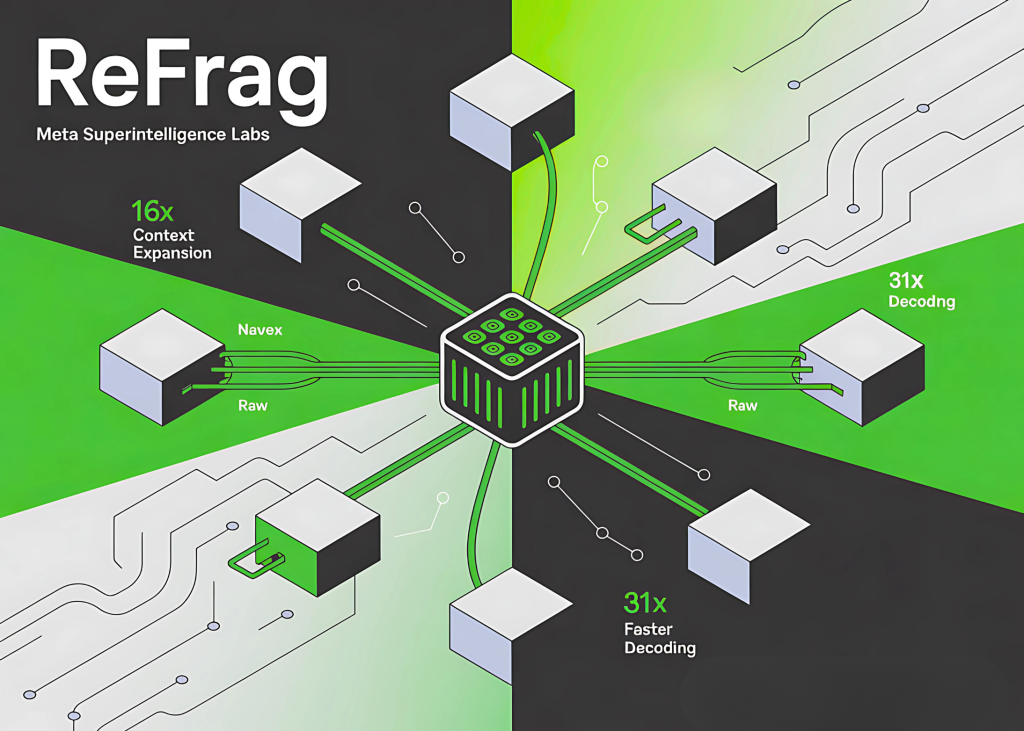

A team from Meta Superintelligence Labs, the National University of Singapore, and Rice University has introduced a decoding framework called REFRAG (REpresentation For RAG). It targets the efficiency of retrieval-augmented generation (RAG) and extends the usable context window of large language models (LLMs) by 16 times.

Significant Performance Improvements

Beyond the larger context window, REFRAG speeds up time-to-first-token (TTFT) by up to 30.85 times without sacrificing accuracy. This addresses a critical bottleneck LLMs face when processing long contexts.

Understanding the Bottleneck of Long Contexts

The challenge with long contexts lies in the attention mechanism of large language models, whose cost scales quadratically with input length. As a document grows, compute and memory costs grow with the square of its length: if a document is twice as long, the attention cost roughly quadruples. This slows inference and also inflates the key-value (KV) cache, which makes large contexts impractical in many real-world applications.
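The quadratic scaling described above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it counts query-key pairs for full self-attention and estimates KV cache size with the standard "keys plus values, per layer, per head" formula. The function names and the model dimensions (32 layers, 32 KV heads, head size 128, 2 bytes per value) are hypothetical and do not come from the article.

```python
def attention_pairs(num_tokens: int) -> int:
    """Query-key pairs scored by full (unmasked) self-attention over num_tokens."""
    return num_tokens * num_tokens


def kv_cache_bytes(
    num_tokens: int,
    num_layers: int = 32,      # hypothetical model depth
    num_kv_heads: int = 32,    # hypothetical number of key/value heads
    head_dim: int = 128,       # hypothetical per-head dimension
    bytes_per_value: int = 2,  # e.g. 16-bit floats
) -> int:
    """Approximate KV cache size: one key and one value vector per token, head, and layer."""
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value


if __name__ == "__main__":
    for n in (4096, 8192, 16384):
        gib = kv_cache_bytes(n) / 1024**3
        print(f"{n:>6} tokens: {attention_pairs(n):>12} attention pairs, "
              f"{gib:.1f} GiB KV cache")
```

Doubling the token count quadruples the number of attention pairs, while the KV cache grows linearly, so both time and memory become pressure points as contexts lengthen.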

REFRAG's Innovative Compression Technique

To mitigate these challenges, REFRAG uses a lightweight encoder that divides retrieved passages into fixed-size segments, typically 16 tokens each. Each segment is then compressed into a dense chunk embedding, so the retrieved text is represented by roughly one embedding per 16 tokens rather than one per token.
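The chunk-and-compress step can be sketched in a few lines. This is a toy illustration, not REFRAG's encoder: the article only says a lightweight encoder produces dense chunk embeddings, so mean pooling is used here purely as a stand-in, and the names `split_into_chunks` and `compress_passage` are hypothetical.

```python
from typing import List, Sequence

CHUNK_SIZE = 16  # tokens per segment, the typical value given in the article

Vector = List[float]


def split_into_chunks(items: Sequence, chunk_size: int = CHUNK_SIZE) -> List[Sequence]:
    """Split a sequence into consecutive fixed-size chunks (the last may be shorter)."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def mean_pool(vectors: Sequence[Vector]) -> Vector:
    """Average token vectors element-wise. ASSUMPTION: stand-in for a learned encoder."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def compress_passage(token_vectors: Sequence[Vector],
                     chunk_size: int = CHUNK_SIZE) -> List[Vector]:
    """Map per-token vectors to one embedding per chunk."""
    return [mean_pool(chunk) for chunk in split_into_chunks(token_vectors, chunk_size)]


if __name__ == "__main__":
    # Toy passage: 64 tokens, each a 4-dimensional vector.
    passage = [[float(i)] * 4 for i in range(64)]
    chunk_embeddings = compress_passage(passage)
    print(len(passage), "token vectors ->", len(chunk_embeddings), "chunk embeddings")
```

With 16-token chunks, a 64-token passage collapses to 4 embeddings, which is where the shorter decoder input and the reduced attention cost come from.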

Preserving Accuracy During Acceleration

A key feature of REFRAG is that it maintains accuracy while significantly increasing processing speed. In RAG scenarios, many retrieved passages contribute little to the final output, yet traditional models pay the full processing cost for every passage. Because REFRAG works with compressed chunk embeddings instead of every token, that cost is reduced and resources are used more efficiently.

Experimental Insights

Initial experiments conducted by the research team demonstrate that REFRAG not only accelerates processing but also retains the integrity of generated responses. These findings suggest that REFRAG could lead to broader applications of LLMs in various fields, enhancing their accessibility and practicality.

Conclusion

The introduction of REFRAG marks a significant advancement in the field of artificial intelligence, particularly in optimizing the efficiency of language models. As the demand for advanced AI solutions continues to rise, innovations like REFRAG will play a crucial role in shaping the future of machine learning technologies.

Rocket Commentary

The introduction of REFRAG marks a significant step forward in the evolution of large language models by dramatically enhancing their context processing capabilities. By extending context windows and accelerating time-to-first-token performance, this innovative framework addresses a critical limitation that has hindered the practical deployment of LLMs in complex applications. However, as we celebrate these advancements, it is crucial to remain vigilant about the ethical implications of such technologies. The ability to process longer contexts could lead to more powerful applications, but it also raises concerns about misinformation and bias being magnified in this expanded capacity. The industry must prioritize transparency and responsible usage to ensure that these tools are accessible and transformative for all stakeholders, not just a select few. Embracing a balanced approach will be essential as we navigate the potential of AI to reshape industries and enhance human experiences.
