Mastering Speech Enhancement and ASR with Python and SpeechBrain

A recent MarkTechPost tutorial by Asif Razzaq walks readers through an advanced but practical workflow for building a speech enhancement and automatic speech recognition (ASR) pipeline with the SpeechBrain library.

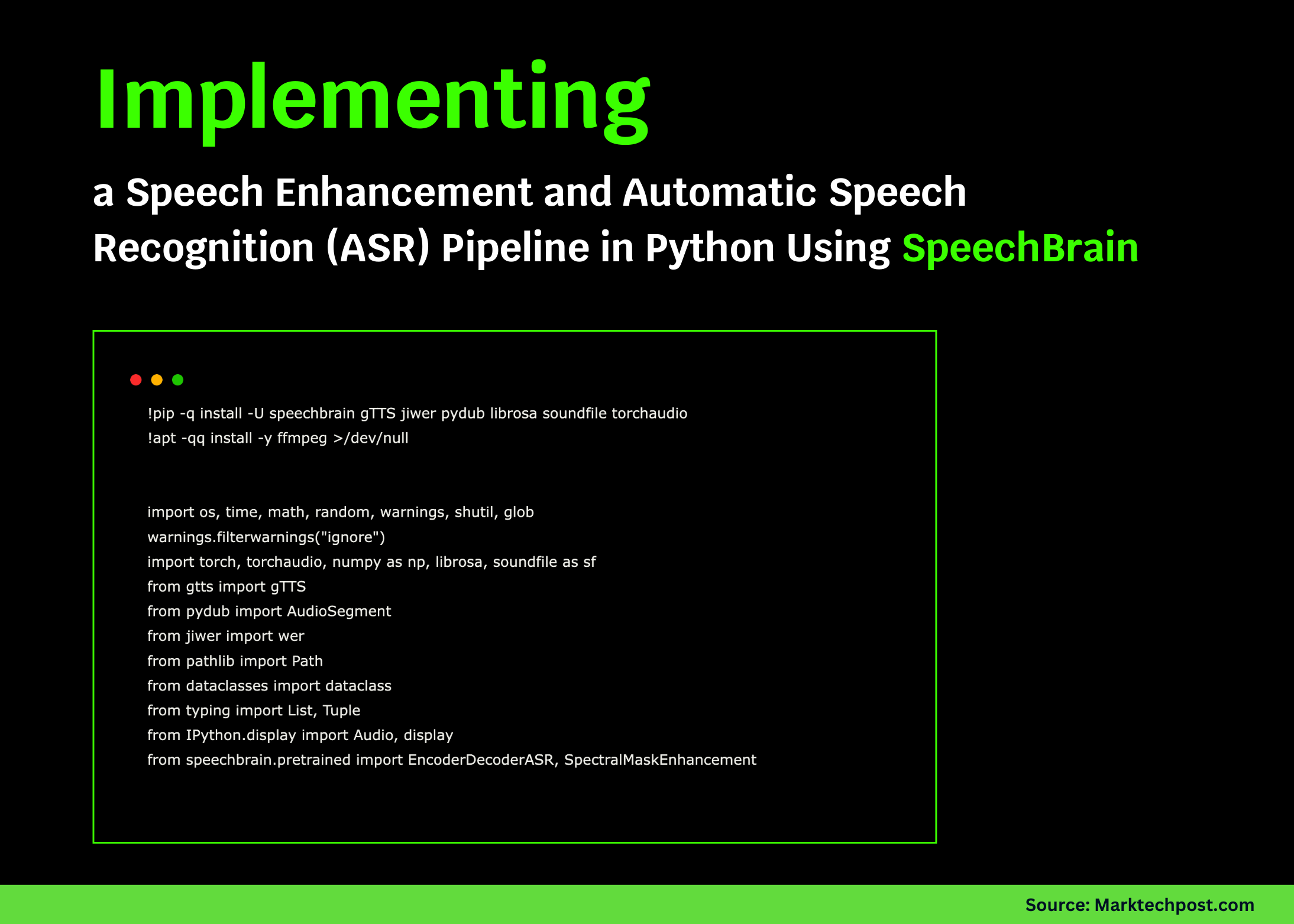

Step-by-Step Workflow

The tutorial begins by generating clean speech samples with gTTS (Google Text-to-Speech), a Python library that converts text into spoken audio. Noise is then deliberately added to these samples to simulate real-world recording conditions. This step matters because it makes it possible to measure how much background noise degrades speech recognition accuracy.
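The summary does not say which noise type or signal-to-noise ratio (SNR) the tutorial uses. As a minimal sketch, assuming additive white Gaussian noise, the mixing step can be written in plain Python: the noise is rescaled so that 10 * log10(P_signal / P_noise) equals a chosen SNR in decibels. The helper names (`add_noise_at_snr`, `measured_snr_db`) and the 440 Hz test tone standing in for speech are hypothetical illustrations, not code from the tutorial.

```python
import math
import random


def signal_power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def add_noise_at_snr(clean, snr_db, seed=0):
    """Return clean + white Gaussian noise scaled to the requested SNR (dB).

    Since SNR_dB = 10 * log10(P_signal / P_noise), the target noise power
    is P_signal / 10 ** (snr_db / 10).
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    target_noise_power = signal_power(clean) / (10 ** (snr_db / 10))
    # Rescale the drawn noise so its empirical power matches the target exactly.
    scale = math.sqrt(target_noise_power / signal_power(noise))
    return [c + scale * n for c, n in zip(clean, noise)]


def measured_snr_db(clean, noisy):
    """SNR of `noisy` relative to the reference `clean` signal, in dB."""
    residual = [n - c for c, n in zip(clean, noisy)]
    return 10 * math.log10(signal_power(clean) / signal_power(residual))


# Hypothetical stand-in for speech: a 440 Hz tone, 0.1 s at 16 kHz.
sr = 16000
clean = [0.5 * math.sin(2 * math.pi * 440 * t / sr) for t in range(sr // 10)]
noisy = add_noise_at_snr(clean, snr_db=5.0)
print(round(measured_snr_db(clean, noisy), 2))  # prints 5.0
```

In a full pipeline the clean samples would come from the gTTS output after decoding it to a waveform; lowering `snr_db` produces harder test conditions for the recognizer.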

Once the audio has been corrupted with noise, the tutorial passes it through SpeechBrain's pretrained MetricGAN+ model to denoise it. MetricGAN+ is a deep-learning enhancement model that estimates a spectral mask for the noisy input and is trained to maximize a perceptual speech-quality metric (PESQ) rather than a plain signal-level loss.
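A sketch of the enhancement step, following the usage shown on the public model card for SpeechBrain's `speechbrain/metricgan-plus-voicebank` checkpoint rather than the tutorial's own code. It assumes SpeechBrain, PyTorch, and torchaudio are installed; in SpeechBrain 1.x the class is imported from `speechbrain.inference.enhancement`, while 0.5.x releases exposed it from `speechbrain.pretrained`. The function name `enhance_wav` and its default `savedir` are hypothetical. Imports are deferred into the function so the definition loads even where SpeechBrain is absent.

```python
def enhance_wav(noisy_path, enhanced_path,
                savedir="pretrained_models/metricgan-plus-voicebank"):
    """Denoise a WAV file with SpeechBrain's pretrained MetricGAN+ model."""
    # Deferred imports: heavy dependencies are only needed when this runs.
    import torch
    import torchaudio
    try:
        from speechbrain.inference.enhancement import SpectralMaskEnhancement  # SpeechBrain >= 1.0
    except ImportError:
        from speechbrain.pretrained import SpectralMaskEnhancement  # older 0.5.x releases

    # Downloads the checkpoint into `savedir` on first use.
    model = SpectralMaskEnhancement.from_hparams(
        source="speechbrain/metricgan-plus-voicebank",
        savedir=savedir,
    )
    # load_audio converts the file to the model's expected input format;
    # unsqueeze(0) adds the batch dimension.
    noisy = model.load_audio(noisy_path).unsqueeze(0)
    # `lengths` holds relative lengths per batch item (1.0 = full signal).
    enhanced = model.enhance_batch(noisy, lengths=torch.tensor([1.0]))
    # The model operates on 16 kHz audio, so the output is saved at 16 kHz.
    torchaudio.save(enhanced_path, enhanced.cpu(), 16000)
    return enhanced_path
```

Usage, with hypothetical file names: `enhance_wav("noisy.wav", "enhanced.wav")`. The checkpoint was trained on 16 kHz VoiceBank-DEMAND audio, so 16 kHz input is the safest assumption.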

Automatic Speech Recognition

With the enhanced audio in hand, the tutorial runs automatic speech recognition using a CRDNN (convolutional, recurrent, and fully connected deep neural network) model combined with language-model rescoring. To quantify the benefit of enhancement, it compares the word error rate (WER), the number of substituted, deleted, and inserted words divided by the number of words in the reference transcript, on the audio before and after enhancement. A lower WER after enhancement indicates that denoising improved recognition accuracy, giving a concrete, quantitative demonstration of SpeechBrain's usefulness.
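The recognition and scoring steps can be sketched as follows. `transcribe` follows the model card for SpeechBrain's `speechbrain/asr-crdnn-rnnlm-librispeech` checkpoint, a CRDNN acoustic model paired with an RNN language model; whether the tutorial uses this exact checkpoint is an assumption. `word_error_rate` is a standard word-level edit distance, (S + D + I) / N. Both helper names are hypothetical, and the normalization (upper-casing, dropping punctuation) is an assumption made because that checkpoint emits upper-case text without punctuation.

```python
import re


def transcribe(wav_path, savedir="pretrained_models/asr-crdnn-rnnlm-librispeech"):
    """Transcribe a WAV file with SpeechBrain's pretrained CRDNN + RNNLM model."""
    # Deferred imports: SpeechBrain is only needed when this function is called.
    try:
        from speechbrain.inference.ASR import EncoderDecoderASR  # SpeechBrain >= 1.0
    except ImportError:
        from speechbrain.pretrained import EncoderDecoderASR  # older 0.5.x releases

    asr = EncoderDecoderASR.from_hparams(
        source="speechbrain/asr-crdnn-rnnlm-librispeech",
        savedir=savedir,
    )
    return asr.transcribe_file(wav_path)


def normalize(text):
    """Upper-case and strip punctuation so transcripts compare word for word."""
    return re.sub(r"[^A-Z0-9' ]+", " ", text.upper()).split()


def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    # dist[i][j] = minimum edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


reference = "the quick brown fox jumps over the lazy dog"
print(word_error_rate(reference, "THE QUICK BROWN FOX JUMPS OVER THE LAZY DOG"))  # 0.0
print(word_error_rate(reference, "THE QUICK BROWN FOX JUMPED OVER A LAZY DOG"))  # 2 substitutions / 9 words
```

The before/after comparison then amounts to scoring `transcribe` on the noisy file and on the enhanced file against the same reference text. For brevity this sketch reloads the model on every call; loading it once and reusing it would be cheaper.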

Conclusion

The tutorial shows that a complete speech enhancement and recognition pipeline can be assembled with relatively little code, and it encourages readers to explore the underlying implementation. Working through it hands-on gives practitioners a concrete view of how machine learning is applied in audio processing.

Asif Razzaq's tutorial is an excellent resource for anyone looking to deepen their understanding of speech technologies and their real-world applications.

Rocket Commentary

Asif Razzaq's tutorial on using SpeechBrain for speech enhancement and ASR highlights a crucial intersection of AI and real-world use: voice systems must work reliably in noisy environments. Generating clean speech samples and then corrupting them with noise mimics everyday conditions, and it also points to a significant opportunity for businesses that rely on voice technologies. The MetricGAN+ model shows promising progress in audio denoising, yet the ethical implications of deploying such technology deserve continued vigilance. As AI becomes more deeply integrated into communication tools, ensuring that these enhancements are accessible and equitably available will be paramount. By focusing on practical applications that improve user experiences while staying ethically grounded, the industry can meaningfully transform how we interact with technology.

Read the Original Article

This summary was created from the original article by Asif Razzaq, published on MarkTechPost.