Introducing Memp: Revolutionizing Procedural Memory in LLM-Based Agents

As the capabilities of large language model (LLM) agents expand, they are increasingly tasked with complex, multi-step work such as web research, report generation, data analysis, and intricate software workflows. Despite these advances, LLM agents still struggle to manage procedural memory. Procedural knowledge is typically either manually designed into rigid structures or implicitly embedded in model weights, which leaves agents brittle in the face of unexpected disruptions such as network failures or user interface changes.

The Challenge of Procedural Memory

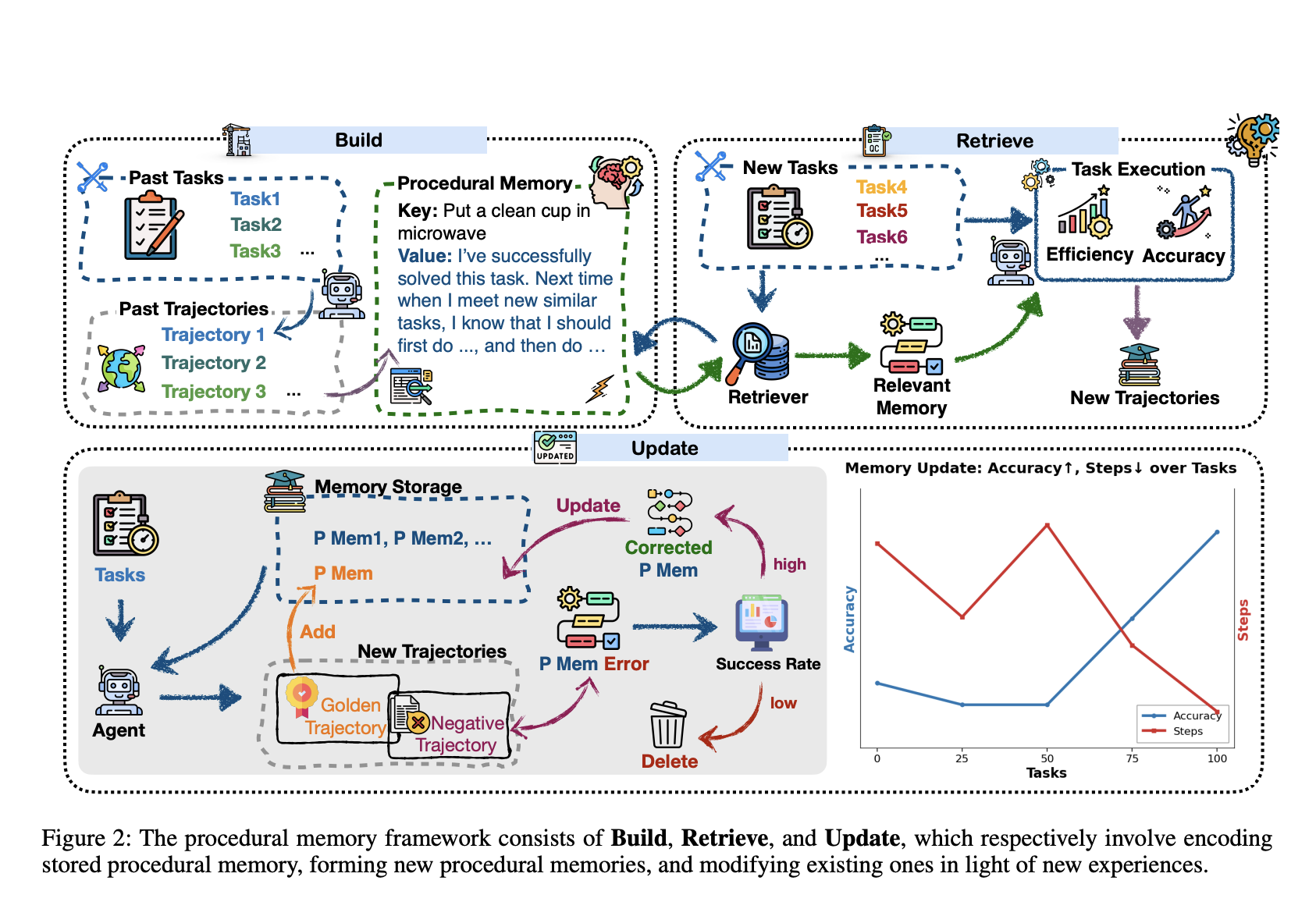

Unlike humans, who learn efficiently by reusing past experience, current LLM agents lack a systematic framework for building, refining, and reusing procedural skills. Existing methods offer some abstraction of past experience but leave a critical gap: optimizing the full lifecycle of procedural memory, from building it to retrieving and updating it.

Understanding Memory in Language Agents

Memory is essential for LLM agents, allowing them to recall past interactions across short-term, episodic, and long-term contexts. Current systems store and retrieve information using techniques such as vector embeddings, semantic search, and hierarchical structures, but procedural memory remains particularly difficult to manage. Procedural memory lets agents internalize and automate repetitive tasks, yet strategies for constructing, updating, and reusing it are still underexplored.
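To make the retrieval side concrete, here is a minimal sketch of semantic search over stored procedures. It assumes a toy bag-of-words vector (the hypothetical `embed` function) in place of a real embedding model, ranks stored memories by cosine similarity to the query, and returns the top `k`. The memory strings are invented examples, not data from Memp.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is treated as unrelated to everything
    return dot / (norm_a * norm_b)


def semantic_search(query: str, memories: list[str], k: int = 1) -> list[str]:
    """Return the k stored memories most similar to the query."""
    q = embed(query)
    ranked = sorted(memories, key=lambda m: cosine(q, embed(m)), reverse=True)
    return ranked[:k]


# Invented procedural memories, one per task.
memories = [
    "book a flight: open airline site, enter dates, pick seat, pay",
    "export report: open dashboard, filter by quarter, download csv",
    "heat food: put food in microwave, close door, start microwave",
]
print(semantic_search("how do I download the quarterly report csv", memories))
```

Here the query shares "download", "report", and "csv" only with the second entry, so that procedure ranks first. A real system would replace `embed` with a learned embedding model and store vectors in an index, but the ranking logic is the same.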

Advancements Through Memp

Memp, a task-agnostic framework, aims to make procedural memory a core optimization target for LLM-based agents. Rather than leaving routines hand-designed or buried in model weights, Memp distills past agent trajectories into both fine-grained, step-by-step instructions and higher-level, script-like abstractions, and studies different strategies for building, retrieving, and updating this memory. As LLM agents continue to evolve, robust and flexible memory will be crucial for improving their resilience and performance.
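The build, retrieve, and update lifecycle of procedural memory can be pictured as a small memory store. This is a hypothetical sketch, not Memp's implementation: it assumes trajectories are lists of action strings, that only successful trajectories are distilled into procedures (`build`), that Jaccard word overlap stands in for embedding similarity (`retrieve`), and that a procedure is dropped after a fixed number of failures (`update`). All class, method, and parameter names are illustrative.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


def tokens(text: str) -> set[str]:
    """Lowercased word set used for a crude similarity measure."""
    return set(re.findall(r"[a-z]+", text.lower()))


@dataclass(eq=False)  # eq=False: procedures are compared by identity
class Procedure:
    task: str         # task description the procedure was distilled from
    steps: list[str]  # step-by-step instructions taken from a trajectory
    successes: int = 0
    failures: int = 0


class ProceduralMemory:
    """Hypothetical build / retrieve / update loop (not Memp's actual code)."""

    def __init__(self) -> None:
        self.procedures: list[Procedure] = []

    def build(self, task: str, trajectory: list[str], succeeded: bool) -> None:
        # Build: distill only successful trajectories into reusable procedures.
        if succeeded:
            self.procedures.append(Procedure(task, list(trajectory)))

    def retrieve(self, task: str) -> Procedure | None:
        # Retrieve: Jaccard word overlap stands in for embedding similarity.
        query = tokens(task)
        best, best_score = None, 0.0
        for proc in self.procedures:
            keys = tokens(proc.task)
            union = query | keys
            score = len(query & keys) / len(union) if union else 0.0
            if score > best_score:
                best, best_score = proc, score
        return best

    def update(self, proc: Procedure, succeeded: bool, max_failures: int = 2) -> None:
        # Update: record the outcome and drop procedures that keep failing.
        if succeeded:
            proc.successes += 1
        else:
            proc.failures += 1
            if proc.failures >= max_failures and proc in self.procedures:
                self.procedures.remove(proc)


memory = ProceduralMemory()
memory.build("heat an egg in the microwave",
             ["take egg", "open microwave", "put egg inside", "start microwave"],
             succeeded=True)
memory.build("book a flight", ["open airline site"], succeeded=False)  # not stored
proc = memory.retrieve("heat a potato in the microwave")
print(proc.steps if proc else None)
```

Deleting a procedure after repeated failures is only one simple update policy; alternatives such as revising a failed procedure instead of discarding it would fit into `update` without changing the rest of the loop.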

Research in this area shows that agents traditionally learn from experience through methods such as reinforcement learning and imitation learning, but these approaches often suffer from low efficiency, poor generalization, and forgetting. A more principled approach to procedural memory could therefore significantly improve the operational effectiveness of these systems.

Rocket Commentary

The article highlights the inherent limitations of current LLM agents in managing procedural memory, a critical challenge as these systems take on increasingly complex tasks. This gap underscores the need for a more sophisticated memory framework that lets LLM agents learn and adapt from experience, much as humans do. As AI continues to transform business processes, addressing these memory management issues will be paramount. Companies must prioritize ethical and accessible AI development that not only improves operational efficiency but also fosters innovation. Ultimately, bridging this gap could unlock transformative potential, making AI even more valuable for driving sustainable growth and decision-making.

Read the Original Article

This summary was created from the original article.