Huawei Unveils CloudMatrix: A Revolutionary AI Datacenter Architecture

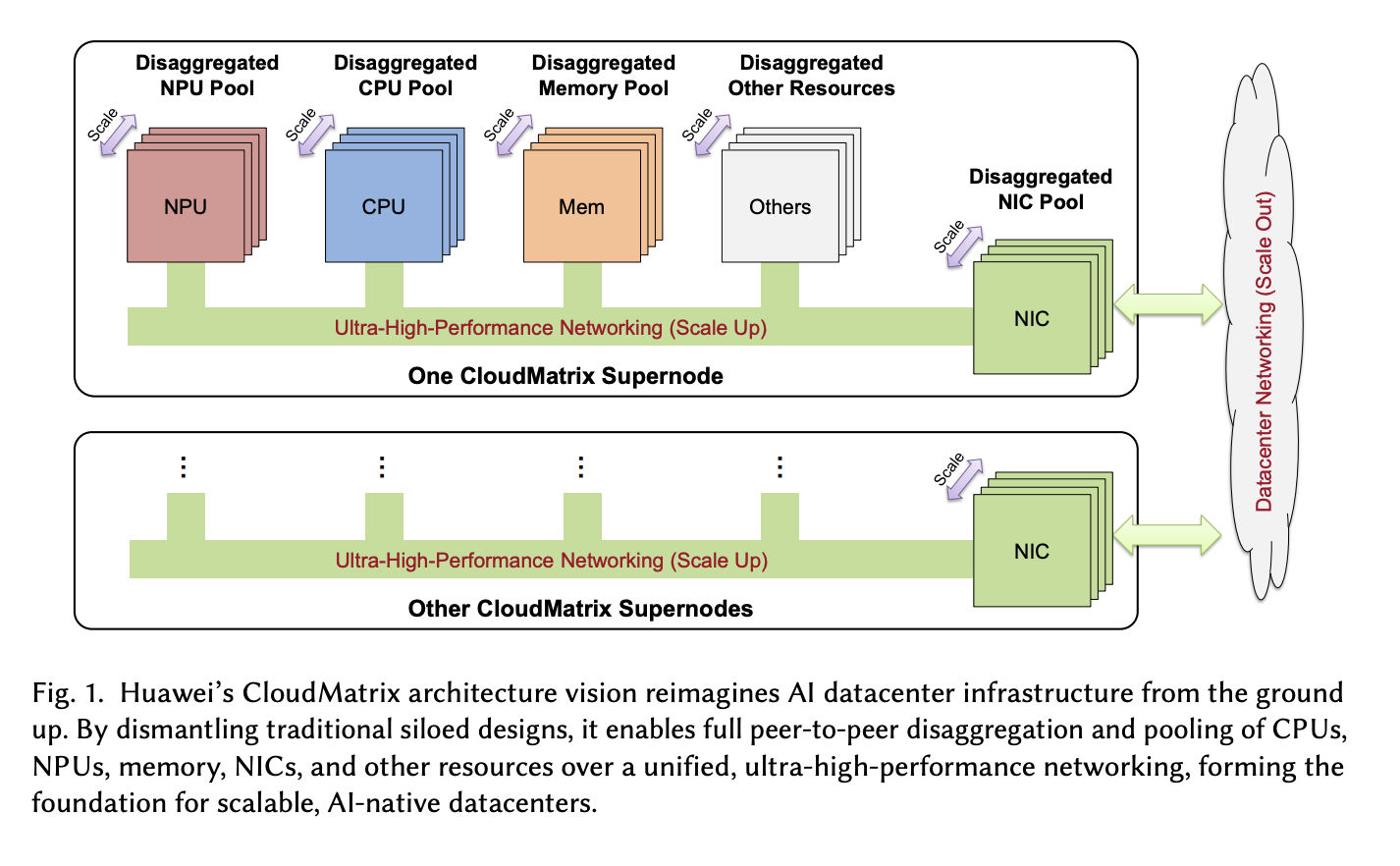

Huawei has introduced CloudMatrix, a peer-to-peer AI datacenter architecture designed to improve the scalability and efficiency of large language model (LLM) serving. The architecture targets the challenges created by the rapid growth of LLMs, whose parameter counts now reach the trillions.

Understanding the Challenges

Recent models such as DeepSeek-R1, LLaMA-4, and Qwen-3 require substantial compute, high memory bandwidth, and fast inter-chip communication. Mixture-of-experts (MoE) designs improve efficiency by activating only part of the model for each token, but they complicate expert routing. Context windows that can exceed a million tokens add further strain on attention computation and key-value (KV) cache storage, and this pressure grows with the number of concurrent users.
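To make the KV cache pressure concrete, here is a rough size estimate for standard multi-head or grouped-query attention, where each layer stores one key and one value vector per KV head for every token. The model shape is hypothetical and does not describe DeepSeek-R1, LLaMA-4, or Qwen-3. Architectures that compress the cache, such as DeepSeek's multi-head latent attention, need less than this formula gives.

```python
from dataclasses import dataclass


@dataclass
class ModelShape:
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int  # e.g. 2 for BF16/FP16


def kv_cache_bytes(shape: ModelShape, seq_len: int, batch: int) -> int:
    # Factor of 2: one key tensor and one value tensor per layer.
    per_token = (
        2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * shape.bytes_per_elem
    )
    # Cache grows linearly with context length and with concurrent sequences.
    return per_token * seq_len * batch


if __name__ == "__main__":
    # Hypothetical model shape, chosen only for illustration.
    shape = ModelShape(num_layers=64, num_kv_heads=8, head_dim=128, bytes_per_elem=2)
    for seq_len in (8_192, 131_072, 1_048_576):
        gib = kv_cache_bytes(shape, seq_len, batch=1) / 2**30
        print(f"{seq_len:>9} tokens: {gib:8.1f} GiB per sequence")
```

With this hypothetical shape, each token costs 256 KiB of cache. An 8,192-token sequence therefore needs 2 GiB, and a 1,048,576-token sequence needs 256 GiB. Multiplying by the number of concurrent sequences shows why KV storage, not model weights alone, can dominate memory during serving.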

Real-World Implications

In practice, unpredictable inputs, uneven expert activation, and bursty query traffic make these models even harder to serve. Meeting these pressures calls for rethinking AI infrastructure as a whole, with a focus on hardware-software co-design, adaptive orchestration, and elastic resource management.

Key Trends Driving Innovation

The evolution of LLMs is influenced by three primary trends:

- Escalating Parameter Counts: Models are now reaching trillions of parameters, increasing their computational demands.

- Sparse MoE Architectures: Only a subset of experts is activated for each token, which keeps per-token compute low while preserving a large total model capacity.

- Extended Context Windows: Context lengths have expanded significantly, enabling long-form reasoning while simultaneously straining compute and memory resources.
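Sparse routing can be illustrated with generic top-k softmax gating. A router scores every expert for each token, only the k highest-scoring experts run, and their outputs are mixed using weights renormalized over those k experts. This is a minimal standard-library sketch under that assumption, not the router of any model named above. The skewed bias is hypothetical and only shows how uneven expert activation appears as load imbalance.

```python
import math
import random


def top_k_route(logits: list[float], k: int) -> list[tuple[int, float]]:
    # Pick the k experts with the highest router scores for this token.
    top = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    # Softmax over the selected experts only, so the k weights sum to 1.
    m = max(logits[e] for e in top)
    exps = [math.exp(logits[e] - m) for e in top]
    total = sum(exps)
    return [(e, x / total) for e, x in zip(top, exps)]


def expert_load(routes: list[list[tuple[int, float]]], num_experts: int) -> list[int]:
    # Count how many token assignments each expert receives.
    load = [0] * num_experts
    for token_routes in routes:
        for e, _ in token_routes:
            load[e] += 1
    return load


if __name__ == "__main__":
    rng = random.Random(0)
    num_experts, k, num_tokens = 8, 2, 1000
    # Hypothetical skewed router: experts 0 and 1 get a bias, mimicking uneven activation.
    bias = [1.0, 0.8] + [0.0] * (num_experts - 2)
    routes = [
        top_k_route([rng.gauss(0.0, 1.0) + bias[e] for e in range(num_experts)], k)
        for _ in range(num_tokens)
    ]
    load = expert_load(routes, num_experts)
    print("tokens per expert:", load)
    print("max/mean load ratio:", max(load) / (sum(load) / num_experts))
```

The per-expert token count is the quantity a serving system has to balance. A max/mean ratio well above 1 means some experts, and the chips hosting them, become hotspots while others sit idle.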

As AI technologies advance, datacenters must adapt to these escalating demands to ensure efficient and effective deployment of LLMs.

Rocket Commentary

Huawei's introduction of CloudMatrix represents a significant step forward in addressing the scalability challenges associated with large language models (LLMs). However, while the peer-to-peer architecture promises enhanced efficiency, we must remain cautious about the implications of such advancements. The complexity introduced by mixture-of-experts (MoE) designs and the burgeoning context windows could lead to accessibility barriers for smaller enterprises. As AI continues to evolve, it is crucial that innovations like CloudMatrix not only push the boundaries of computational capability but also prioritize ethical use and democratization. The industry must ensure that these powerful technologies serve as catalysts for transformation rather than creating further divides.

Read the Original Article

This summary was created from the original article; the full story is available from the original source.