Harnessing GPU Power: Building Advanced LangChain Workflows with Ollama

In a recent tutorial by Asif Razzaq published on MarkTechPost, a comprehensive guide is provided on how to construct a GPU-capable local Large Language Model (LLM) stack that integrates Ollama and LangChain. This tutorial is particularly beneficial for professionals looking to enhance their AI workflows.

Creating the LLM Stack

The process begins with the installation of necessary libraries and the launch of the Ollama server. Users will pull a model and wrap it in a custom LangChain LLM. This setup allows for precise control over various parameters such as temperature, token limits, and context, which are crucial for optimizing performance in AI applications.

Incorporating Retrieval-Augmented Generation

One of the standout features of this setup is the addition of a Retrieval-Augmented Generation (RAG) layer. This layer is designed to effectively ingest PDFs or text, which are then processed into manageable chunks. These chunks are embedded using Sentence-Transformers, enabling the system to serve grounded answers based on the ingested material.

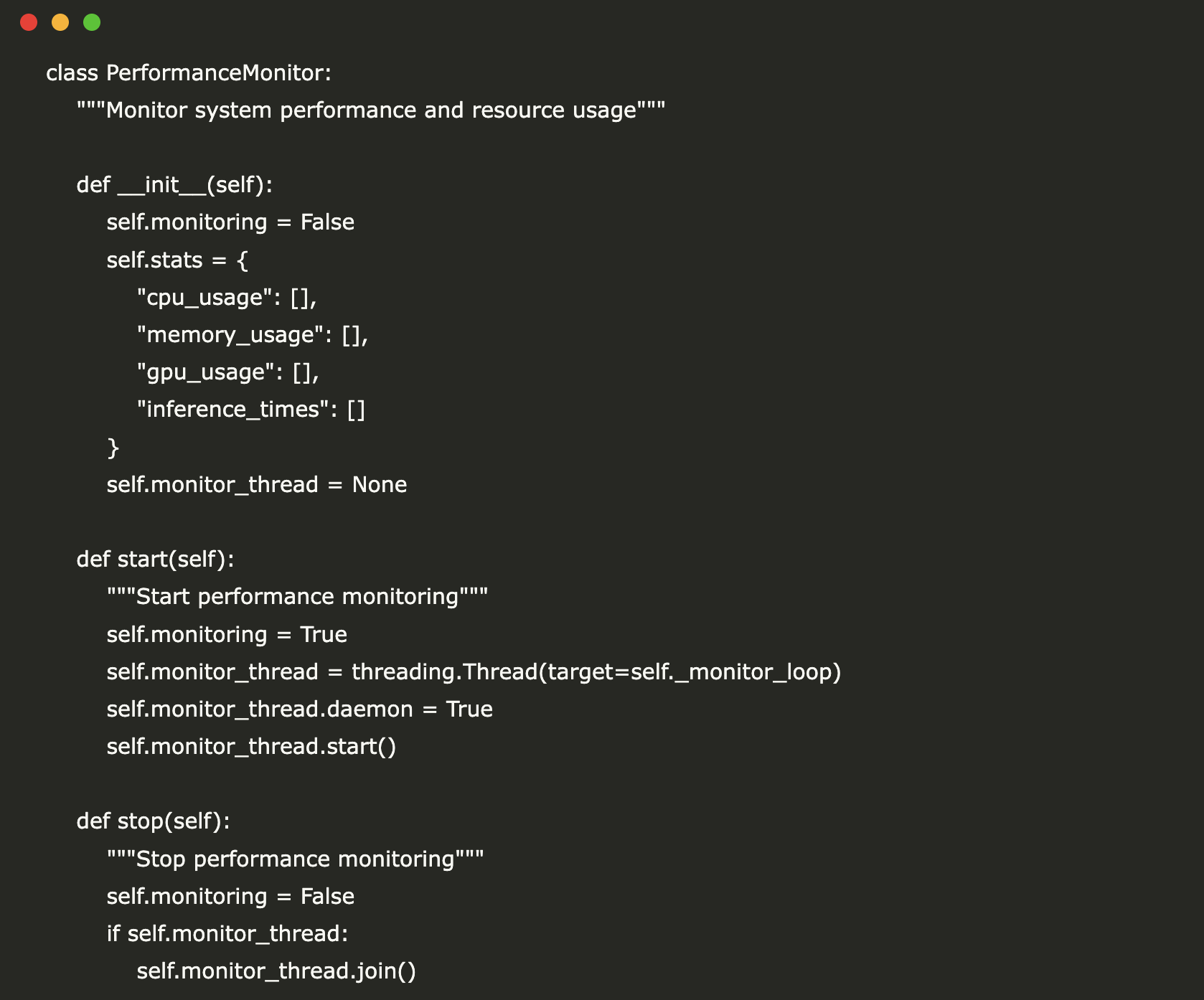

Multi-Session Chat Performance Monitoring

Furthermore, the tutorial addresses the management of multi-session chat memory, a critical aspect for applications requiring contextual awareness across multiple interactions. Users can register tools such as web search and RAG queries, and establish an agent capable of reasoning about when to utilize these tools, enhancing the overall efficiency and responsiveness of the chat system.

Conclusion

This tutorial not only demonstrates the technical implementation of a GPU-accelerated Ollama LangChain workflow but also provides valuable insights into optimizing AI interactions. As organizations continue to adopt AI technologies, resources like these are essential for staying competitive in the rapidly evolving landscape of artificial intelligence.

Rocket Commentary

Asif Razzaq's tutorial presents an important step towards democratizing access to powerful AI tools by providing a clear pathway to creating a GPU-capable local LLM stack with Ollama and LangChain. This development is particularly promising for professionals aiming to refine AI workflows, as it underscores the significance of customization in AI applications. However, as we embrace these advancements, we must also prioritize ethical considerations and accessibility. The potential for misuse in AI technologies calls for robust guidelines to ensure that innovations like Retrieval-Augmented Generation are harnessed responsibly. By fostering an environment that encourages transparency and ethical usage, we can ensure that AI remains a transformative force in business and development, driving meaningful change rather than exacerbating existing disparities.

Read the Original Article

This summary was created from the original article. Click below to read the full story from the source.

Read Original Article