Google AI Unveils ReasoningBank: A Framework for Self-Evolving LLM Agents

Google Research has introduced ReasoningBank, a memory framework that lets large language model (LLM) agents learn from their own experience, including both successes and failures, without retraining.

Understanding ReasoningBank

ReasoningBank distills an agent's interaction traces into reusable, high-level reasoning strategies. The agent retrieves relevant strategies to guide later decisions, then feeds what it learns from each new task back into the bank, forming a self-evolving loop that improves performance over time. A companion technique, memory-aware test-time scaling (MaTTS), strengthens this loop by spending more compute on each task so the agent generates more varied experience for the memory to learn from.
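The loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Google's implementation: the names `MemoryBank`, `distill`, and `run_episode` are hypothetical, retrieval uses simple word overlap where a real system would more likely use embedding similarity, and `distill` is a placeholder for what would be an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryBank:
    """Hypothetical store of distilled strategies (plain strings here)."""
    strategies: list[str] = field(default_factory=list)

    def retrieve(self, task: str, k: int = 2) -> list[str]:
        # Assumption: rank strategies by word overlap with the task text.
        task_words = set(task.lower().split())
        scored = [
            (len(task_words & set(s.lower().split())), s)
            for s in self.strategies
        ]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [s for score, s in ranked[:k] if score > 0]

    def add(self, strategy: str) -> None:
        # Skip exact duplicates so the bank stays compact.
        if strategy not in self.strategies:
            self.strategies.append(strategy)


def distill(task: str, trace: list[str], succeeded: bool) -> str:
    """Placeholder for an LLM call that turns a trace into a strategy."""
    outcome = "worked" if succeeded else "failed"
    return f"For tasks like '{task}': the approach ending in '{trace[-1]}' {outcome}."


def run_episode(bank: MemoryBank, task: str, act) -> bool:
    """One pass of the loop: retrieve, act, distill, store."""
    hints = bank.retrieve(task)
    trace, succeeded = act(task, hints)  # `act` is the agent, supplied by the caller
    bank.add(distill(task, trace, succeeded))  # failures are stored too
    return succeeded
```

Note that the bank is updated after every episode regardless of outcome, which mirrors the article's point that failures as well as successes feed the memory.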

Performance Gains

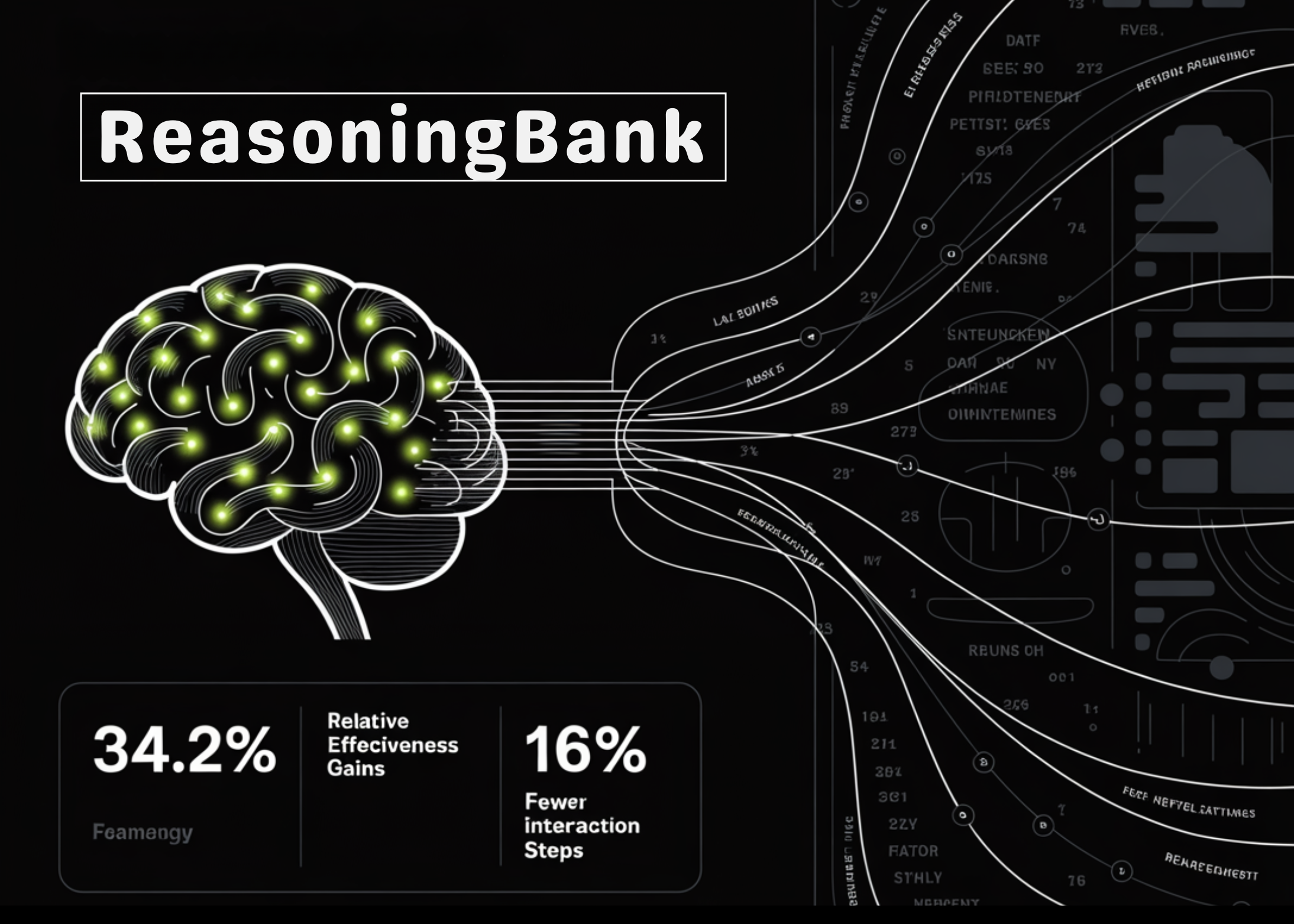

According to Google Research, combining ReasoningBank with MaTTS yields up to a 34.2% relative gain in effectiveness and 16% fewer interaction steps on web browsing and software engineering benchmarks. Earlier memory systems, by comparison, mostly stored raw trajectories or success-only workflows.

Addressing Limitations of Conventional Memory

Traditional memory systems in LLM agents often struggle to accumulate and reuse experiential knowledge. They tend to store raw logs or build rigid workflows that adapt poorly to new tasks. Many also ignore failures, even though failed attempts often carry actionable lessons.

ReasoningBank instead treats memory as a collection of compact, human-readable strategy items. Because each item captures a general lesson instead of a task-specific log, the knowledge transfers more readily across tasks and domains.
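To make the idea of a compact, human-readable strategy item concrete, here is one possible shape for such an item and a helper that formats a set of items for inclusion in an agent's prompt. The field names (`title`, `description`, `content`, `from_failure`) and the function `render_for_prompt` are assumptions chosen for illustration; the article does not specify a schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StrategyItem:
    """Hypothetical schema for one memory item."""
    title: str          # short name for the strategy
    description: str    # one-line summary of when it applies
    content: str        # the actionable lesson itself
    from_failure: bool = False  # whether it was learned from a failed attempt


def render_for_prompt(items: list[StrategyItem]) -> str:
    """Format items as a numbered, readable list for the agent's context."""
    lines = []
    for i, item in enumerate(items, start=1):
        tag = "lesson from a failure" if item.from_failure else "lesson from a success"
        lines.append(f"{i}. {item.title} ({tag})")
        lines.append(f"   {item.description}")
        lines.append(f"   {item.content}")
    return "\n".join(lines)


example = StrategyItem(
    title="Check pagination",
    description="Search results may span several pages.",
    content="Look for a next-page control before concluding an item is missing.",
    from_failure=True,
)
print(render_for_prompt([example]))
```

Keeping items this small is what makes them easy for a person to audit and for an agent to reuse on a different task than the one that produced them.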

Conclusion

ReasoningBank lets AI agents learn dynamically from their own experience and adapt more effectively to a variety of tasks. As the technology matures, it could significantly improve what AI systems can do in practical applications.

Rocket Commentary

The introduction of ReasoningBank by Google Research marks a pivotal moment in the evolution of large language models (LLMs). By enabling agents to learn autonomously from their experiences, this framework not only enhances their decision-making capabilities but also raises important questions about the ethical implications of self-evolving AI systems. While the promise of adaptive learning is enticing, we must ensure that these advancements prioritize accessibility and responsible use in business contexts. As organizations increasingly rely on AI, it is crucial that frameworks like ReasoningBank are developed with transparency and fairness in mind, ultimately fostering a transformative impact that benefits all stakeholders rather than a select few.
