Google AI Unveils Guardrailed-AMIE: A New Paradigm for Medical Accountability

Google AI has introduced Guardrailed-AMIE (g-AMIE), a multi-agent architecture designed to enhance accountability in conversational medical AI systems. The system is built on the Gemini 2.0 Flash model and is a collaboration between Google DeepMind, Google Research, and Harvard Medical School.

The Need for Oversight

As diagnostic AI agents powered by large language models (LLMs) become increasingly proficient at clinical dialogue, differential diagnosis, and management planning in simulated environments, regulatory oversight remains essential. Individual diagnoses and treatment recommendations are strictly regulated: only licensed clinicians are authorized to make these patient-facing decisions.

Traditional healthcare models use a hierarchical oversight structure in which experienced physicians review and approve diagnostic and management plans proposed by advanced practice providers (APPs), including nurse practitioners (NPs) and physician assistants (PAs). The same kind of safety protocol must be mirrored in the deployment of medical AI.

Guardrailed-AMIE: System Design

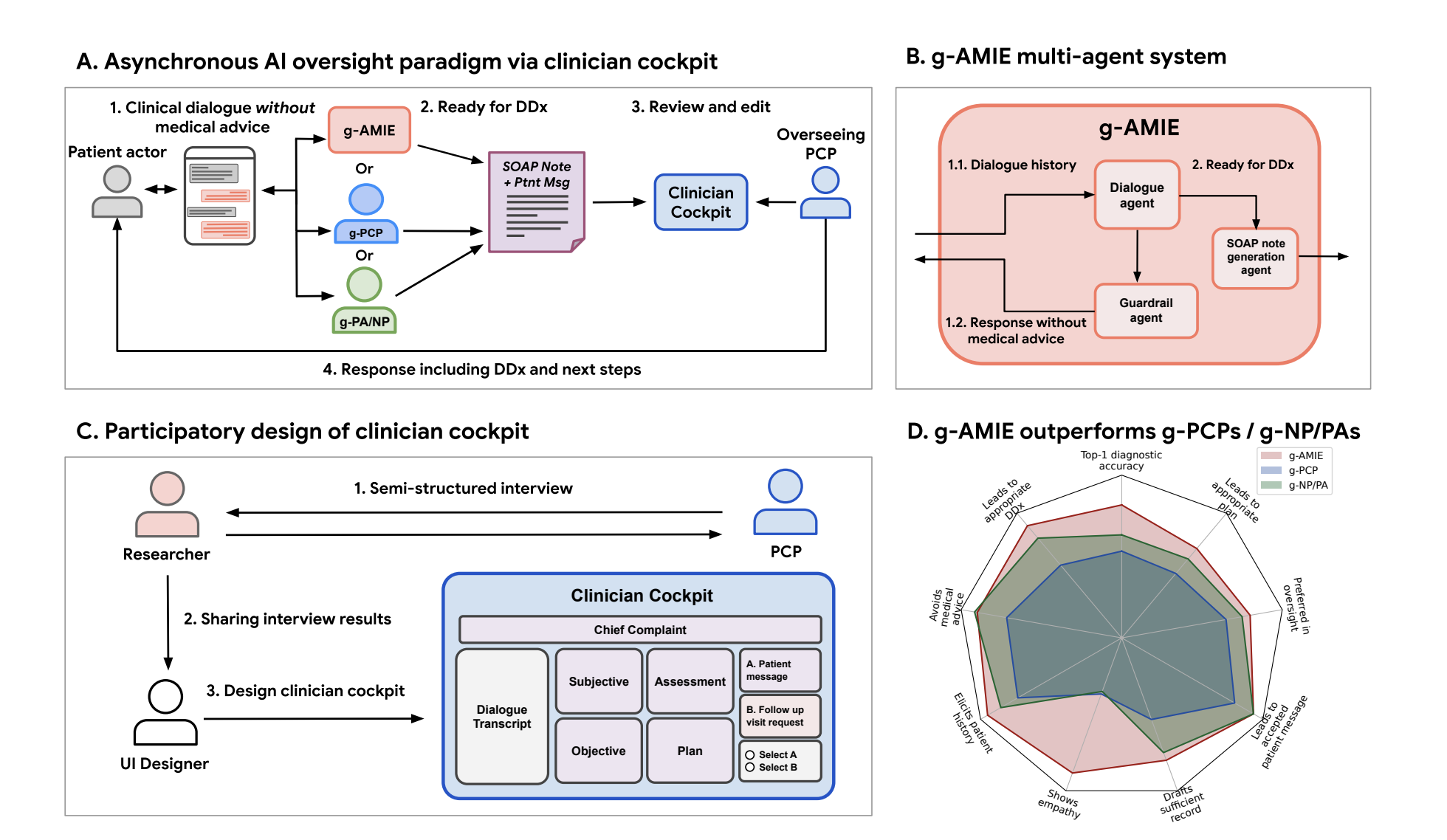

The g-AMIE system is structured to maintain a clear separation between patient history intake and the delivery of personalized medical advice. This model incorporates:

- Intake with Guardrails: The AI engages in dialogues to gather patient history and document symptoms without issuing any direct diagnoses or management recommendations.

- Asynchronous Oversight: In the multi-agent design, the material gathered during intake is handed to a human clinician for review rather than acted on during the patient conversation, so that diagnostic and management decisions remain within the purview of licensed professionals.
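The separation between guardrailed intake and clinician review can be illustrated with a small sketch. This is not the g-AMIE implementation: every name here (`IntakeAgent`, `guardrail`, `clinician_review`, `BLOCKED_TERMS`) is hypothetical, and the keyword filter is a deliberately crude stand-in for whatever guardrail mechanism the real system uses. It only shows the shape of the workflow, in which the intake side can collect history but cannot give advice, and the plan is written by a clinician in a later step.

```python
from dataclasses import dataclass, field
from enum import Enum
from queue import Queue
from typing import List, Optional


class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"


@dataclass
class IntakeRecord:
    """History collected during intake, awaiting clinician review."""
    patient_id: str
    history: List[str] = field(default_factory=list)
    status: ReviewStatus = ReviewStatus.PENDING
    clinician_plan: Optional[str] = None


# Hypothetical phrases treated as individualized medical advice.
# A real guardrail would be far more sophisticated than keyword matching.
BLOCKED_TERMS = ("diagnos", "you have", "prescribe", "you should take")

REFUSAL = "I can't give medical advice here; a clinician will review your case."


def guardrail(draft_reply: str) -> str:
    """Return the draft reply, or a refusal if it looks like medical advice."""
    lowered = draft_reply.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return REFUSAL
    return draft_reply


class IntakeAgent:
    """Collects patient history; every outgoing reply passes the guardrail."""

    def __init__(self, review_queue: Queue) -> None:
        self.review_queue = review_queue

    def respond(self, record: IntakeRecord, patient_message: str,
                draft_reply: str) -> str:
        record.history.append(patient_message)
        return guardrail(draft_reply)

    def finish(self, record: IntakeRecord) -> None:
        # Hand-off: the record waits in the queue until a clinician picks it up.
        self.review_queue.put(record)


def clinician_review(review_queue: Queue, plan: str) -> IntakeRecord:
    """Runs later, separately from the patient conversation."""
    record = review_queue.get()
    record.clinician_plan = plan  # the plan comes from the clinician, not the agent
    record.status = ReviewStatus.APPROVED
    return record
```

In this sketch the queue is what makes the oversight asynchronous: `finish` returns immediately, and `clinician_review` can run at any later time. The only code path that sets `clinician_plan` is the clinician's.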

Implications for the Future

As AI becomes more integrated into healthcare, systems like g-AMIE represent a step toward technology that improves clinical workflows while adhering to stringent safety and regulatory standards. The collaboration between Google DeepMind, Google Research, and Harvard Medical School reflects a commitment to developing responsible and effective AI for the medical field.

According to the team behind g-AMIE, this architecture aims to redefine how medical AI can function effectively within the established frameworks of healthcare, ultimately improving patient outcomes while maintaining rigorous accountability.

Rocket Commentary

The introduction of Guardrailed-AMIE (g-AMIE) by Google AI is a notable step toward accountability in conversational medical AI systems. The multi-agent architecture shows what these systems can do in clinical dialogue, but it also underscores the need for regulatory oversight when such technologies are deployed. Balancing innovation with ethical responsibility is crucial: as AI systems become more proficient at diagnostics, the potential for misuse or misinterpretation increases. Companies must prioritize transparency and rigorous testing to build trust with both practitioners and patients. This development is an opportunity to improve healthcare delivery, and it calls for a collaborative framework that keeps AI an ethical partner in patient care.
