Generative AI: A Closer Look at Its Limitations in Understanding the World

In a recent reflection on the capabilities of generative AI, Gary Marcus highlights the failure of large language models (LLMs) to build robust, interpretable models of reality. The issue, also discussed in Apple's "Illusion of Thinking" paper, points to a deeper problem within the field of artificial intelligence.

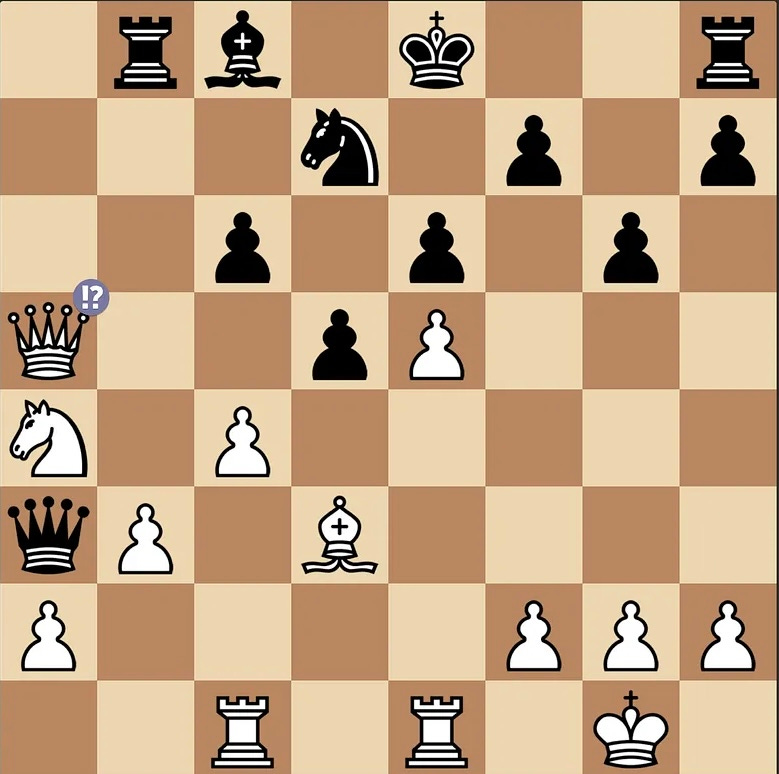

The Chess Analogy

Marcus uses a thought-provoking example involving a synthesized video of two men playing chess. In this scenario, the black player makes an illegal move by shifting his opponent's pawn horizontally across the board. The move breaks two rules at once: a player may not move the opponent's pieces, and pawns never move sideways. For Marcus, this is a clear indication that the model does not understand the rules and dynamics of the game, and the incident serves as a microcosm of the broader challenges faced by LLMs.
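To make the chess example concrete, here is a minimal Python sketch of what an explicit, updatable model of the board could look like. It is an illustration only and does not come from Marcus's article: the `Board` class, its method names, and the coordinate scheme are hypothetical, and the rules are deliberately simplified (pawns only, no en passant, no promotion). The point is that a system holding this kind of state can reject the move seen in the video, because the rule is checked against the current position rather than inferred from surface patterns.

```python
from dataclasses import dataclass, field


@dataclass
class Board:
    """Hypothetical, simplified board model: pawns only, squares are (file, rank) in 0..7."""

    # Maps (file, rank) -> (color, piece_name).
    squares: dict = field(default_factory=dict)

    def place(self, square, color, piece):
        self.squares[square] = (color, piece)

    def is_legal_pawn_move(self, mover, src, dst):
        """Return True if `mover` may move the pawn on `src` to `dst` (simplified rules)."""
        if not all(0 <= coord <= 7 for coord in dst):
            return False
        occupant = self.squares.get(src)
        if occupant is None or occupant[1] != "pawn":
            return False
        if occupant[0] != mover:
            return False  # a player may not move the opponent's piece

        direction = 1 if mover == "white" else -1
        file_delta = dst[0] - src[0]
        rank_delta = dst[1] - src[1]
        target = self.squares.get(dst)

        # Straight advance: one square, or two from the starting rank, onto empty squares.
        if file_delta == 0 and target is None:
            if rank_delta == direction:
                return True
            start_rank = 1 if mover == "white" else 6
            middle = (src[0], src[1] + direction)
            return (
                rank_delta == 2 * direction
                and src[1] == start_rank
                and middle not in self.squares
            )

        # Diagonal capture: one square forward and sideways onto an enemy piece.
        if abs(file_delta) == 1 and rank_delta == direction:
            return target is not None and target[0] != mover

        # Everything else, including a purely horizontal shift, is illegal.
        return False

    def move(self, mover, src, dst):
        """Apply a move, updating the stored position only if the move is legal."""
        if not self.is_legal_pawn_move(mover, src, dst):
            raise ValueError(f"illegal move for {mover}: {src} -> {dst}")
        self.squares[dst] = self.squares.pop(src)
```

With a white pawn placed on `(4, 3)`, a call such as `board.is_legal_pawn_move("black", (4, 3), (5, 3))` is rejected on both counts described above, while a legal advance passed to `move` updates the stored position so that later checks run against the new state.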

A Conversation with Garry Kasparov

Recently, Marcus had the honor of recording a podcast with Garry Kasparov, a legendary chess player and insightful thinker. Their discussion not only covered chess but also delved into the implications of AI in our understanding of complex systems. Marcus expressed his hope that more people would heed Kasparov's warnings regarding global issues, particularly in relation to geopolitical dynamics.

Core Issues with LLMs

According to Marcus, the inability of LLMs to build and maintain coherent, dynamically updated models of the world is a significant limitation. These systems often struggle to reason effectively and to adapt to new information, which leads to incomplete or misguided conclusions. This deficiency highlights the need for advances in AI that prioritize a deeper understanding of context and dynamic reasoning.

The Path Forward

As the field of AI continues to evolve, it is crucial for researchers and developers to address these shortcomings. By focusing on developing models that can accurately interpret and adapt to the complexities of the world, the industry can move closer to realizing the full potential of generative AI.

In summary, Marcus's insights underscore the importance of evaluating AI technologies critically and the need for ongoing innovation to overcome their known limitations.

Rocket Commentary

Gary Marcus’s critique of large language models (LLMs) underscores a critical gap in the AI landscape: the distinction between surface-level interactions and genuine comprehension. The chess analogy starkly illustrates this disconnect, raising alarms about the reliability of AI in contexts requiring strict adherence to rules and logic. As we stand on the brink of transformative AI applications, it’s paramount that developers prioritize ethical and interpretable models that not only perform tasks but also understand the underlying principles of those tasks. This is not just a technical challenge but a call to enhance AI's accessibility and reliability for businesses looking to leverage its capabilities responsibly. Bridging this gap could redefine user trust and expand AI's practical impact across industries.

Read the Original Article

This summary was created from the original article.