Exploring the LLM Arena-as-a-Judge Approach for Evaluating AI Outputs

As large language models (LLMs) take on more tasks, reliable ways to evaluate their outputs have become essential. The LLM Arena-as-a-Judge approach compares outputs head-to-head instead of relying only on isolated numerical scores. Users define the evaluation criteria, such as helpfulness, clarity, or tone, and each comparison is decided against those criteria.

Understanding the Methodology

Instead of assigning each AI-generated response an independent score, the LLM Arena-as-a-Judge approach pits responses against each other and selects the better one according to predefined qualitative criteria.
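The head-to-head idea can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the tutorial's code or any library's API: `Judge` is a hypothetical callable standing in for the judge model (GPT-5 in the tutorial), and `longer_judge` is a toy offline stand-in that simply prefers the longer reply so the example runs without API keys. Every pair of contestants is compared once, and the contestant with the most wins is reported as best. With two contestants, as in the tutorial, this reduces to a single judgment.

```python
from itertools import combinations
from typing import Callable, Dict

# Hypothetical judge interface: (criteria, response_a, response_b) -> "A" or "B".
# In a real setup this would wrap a call to the judge model.
Judge = Callable[[str, str, str], str]


def run_arena(contestants: Dict[str, str], criteria: str, judge: Judge) -> Dict[str, int]:
    """Compare every pair of contestants once and count head-to-head wins."""
    wins = {name: 0 for name in contestants}
    for name_a, name_b in combinations(contestants, 2):
        verdict = judge(criteria, contestants[name_a], contestants[name_b])
        # Any verdict other than "A" is counted as a win for B.
        wins[name_a if verdict == "A" else name_b] += 1
    return wins


def longer_judge(criteria: str, response_a: str, response_b: str) -> str:
    """Toy offline stand-in for an LLM judge: it ignores the criteria and
    prefers the longer response (ties go to A). For illustration only."""
    return "A" if len(response_a) >= len(response_b) else "B"


# Hypothetical contestant names and replies, made up for this example.
responses = {
    "model_x": "Sorry for the mix-up! We are shipping your wireless mouse today "
               "and will email a prepaid label for returning the keyboard.",
    "model_y": "Please contact us again.",
    "model_z": "We apologize for the mix-up.",
}
wins = run_arena(responses, "helpfulness, clarity, tone", longer_judge)
best = max(wins, key=wins.get)
print(wins)  # {'model_x': 2, 'model_y': 0, 'model_z': 1}
print(best)  # model_x
```

Keeping the judge pluggable means the toy heuristic can be swapped for a real LLM call without touching the comparison loop.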

Implementation Steps

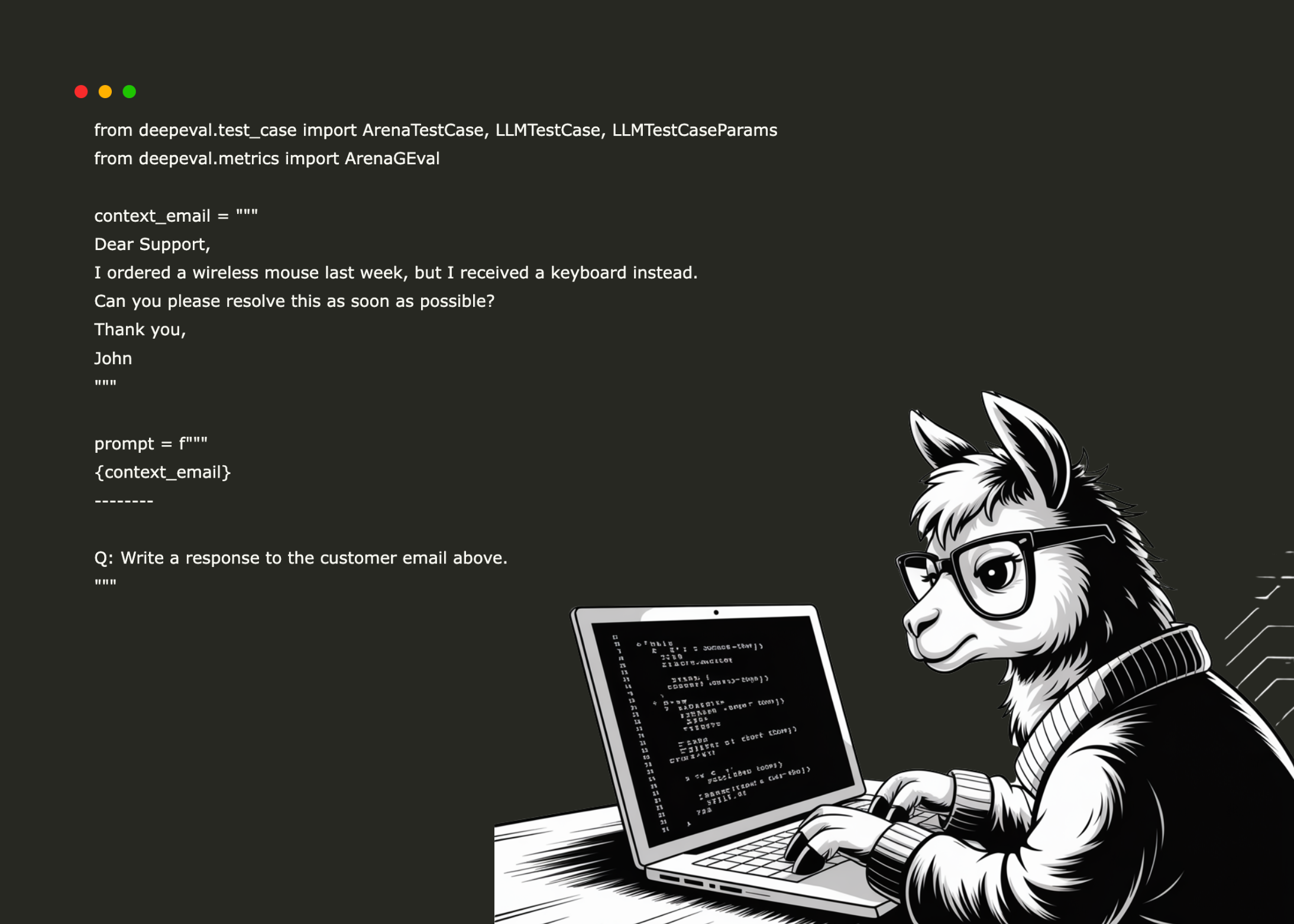

The tutorial by Arham Islam demonstrates the method with OpenAI’s GPT-4.1 and Google’s Gemini 2.5 Pro generating the competing responses and GPT-5 acting as the judge. The worked example is a customer support scenario built around this email:

Dear Support, I ordered a wireless mouse last week, but I received a keyboard instead. Can you please resolve this as soon as possible? Thank you, John.
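For this customer support example, the judge needs the customer email, the two candidate replies, and the criteria. The sketch below assembles them into one prompt and parses the verdict. The prompt wording, the JSON answer format (`{"winner": ..., "reason": ...}`), and all helper names are assumptions made for illustration. The tutorial lists deepeval among its dependencies, and its actual evaluation code may look quite different. Because judge models can favor whichever reply appears first, the hypothetical `agree_across_orders` helper accepts a verdict only when it survives swapping the reply order.

```python
import json
from typing import List, Optional, Tuple

EMAIL = (
    "Dear Support, I ordered a wireless mouse last week, but I received a "
    "keyboard instead. Can you please resolve this as soon as possible? "
    "Thank you, John."
)


def build_judge_prompt(query: str, reply_a: str, reply_b: str, criteria: List[str]) -> str:
    """Assemble a pairwise-comparison prompt (hypothetical wording and format)."""
    return (
        "You are judging two customer support replies.\n"
        f"Criteria: {', '.join(criteria)}.\n\n"
        f"Customer email:\n{query}\n\n"
        f"Reply A:\n{reply_a}\n\n"
        f"Reply B:\n{reply_b}\n\n"
        'Answer with JSON only: {"winner": "A" or "B", "reason": "<one sentence>"}'
    )


def parse_verdict(raw: str) -> Tuple[str, str]:
    """Parse the judge's JSON answer and check that the winner is A or B."""
    data = json.loads(raw)
    winner = str(data.get("winner", "")).strip().upper()
    if winner not in ("A", "B"):
        raise ValueError(f"unexpected winner: {winner!r}")
    return winner, str(data.get("reason", ""))


def agree_across_orders(first: str, swapped: str) -> Optional[str]:
    """Combine two verdicts: `first` from order (A, B), `swapped` from order
    (B, A). In the swapped run, "A" refers to the original reply B. Returns
    the original-order winner if both runs agree, else None."""
    swapped_in_original_terms = "B" if swapped == "A" else "A"
    return first if first == swapped_in_original_terms else None


prompt = build_judge_prompt(EMAIL, "<reply from model 1>", "<reply from model 2>",
                            ["helpfulness", "clarity", "tone"])
# The judge call is omitted; a hand-written sample answer stands in for it.
winner, reason = parse_verdict('{"winner": "a", "reason": "Reply A offers a concrete fix."}')
print(winner)                         # A
print(agree_across_orders("A", "B"))  # A (reply A won in both orders)
print(agree_across_orders("A", "A"))  # None (verdict flipped with position)
```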

Technical Requirements

Reproducing the tutorial requires the following setup:

- Dependencies: install the required Python packages, including deepeval, google-genai, and openai.

- API keys: you need keys for both OpenAI (GPT-4.1 and GPT-5) and Google (Gemini 2.5 Pro). OpenAI keys are created in the OpenAI platform dashboard, and Gemini API keys in Google AI Studio.
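The packages can be installed with `pip install deepeval google-genai openai`. Before running the tutorial, it may help to confirm that both keys are visible to the SDKs. The sketch below assumes the keys are supplied as environment variables: `OPENAI_API_KEY`, which the openai Python SDK reads by default, and `GOOGLE_API_KEY` or `GEMINI_API_KEY`, which recent google-genai versions read. Check the documentation for the SDK versions you install. The function name `missing_api_keys` is hypothetical.

```python
import os
from typing import List, Mapping

# Each provider is satisfied if any one of its listed variables is set.
# Variable names are assumptions based on the SDKs' documented defaults.
REQUIRED_KEYS = {
    "OpenAI (GPT-4.1, GPT-5)": ("OPENAI_API_KEY",),
    "Google (Gemini 2.5 Pro)": ("GOOGLE_API_KEY", "GEMINI_API_KEY"),
}


def missing_api_keys(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the providers for which no non-empty API key variable is set."""
    missing = []
    for provider, names in REQUIRED_KEYS.items():
        if not any(env.get(name) for name in names):
            missing.append(provider)
    return missing


if __name__ == "__main__":
    problems = missing_api_keys()
    if problems:
        print("Missing API keys for:", ", ".join(problems))
    else:
        print("All API keys found.")
```

Passing the environment in as a mapping keeps the check testable without touching the real environment.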

Methods like LLM Arena-as-a-Judge help teams check whether model outputs meet the standards their applications require. Comparing responses side by side against explicit criteria can also reveal quality differences that an isolated numerical score might hide.

Rocket Commentary

The LLM Arena-as-a-Judge methodology shifts AI evaluation away from single numerical scores toward qualitative, side-by-side comparison. This addresses a real weakness of traditional metrics and answers the growing demand for transparency and relevance in AI applications. As businesses rely more on LLMs for decision-making and customer interaction, judging responses on criteria like helpfulness and clarity could improve user trust and satisfaction. However, the judge is itself an LLM and can introduce its own biases, such as favoring a reply because of its position or style, so these methods should be implemented with care and with ethical considerations in mind. As adoption grows, the goal should be to keep such tools accessible and beneficial across industries, so that AI genuinely enhances human capabilities.

Read the Original Article

This summary was created from the original article.