Exploring Native RAG vs. Agentic RAG in AI Decision-Making

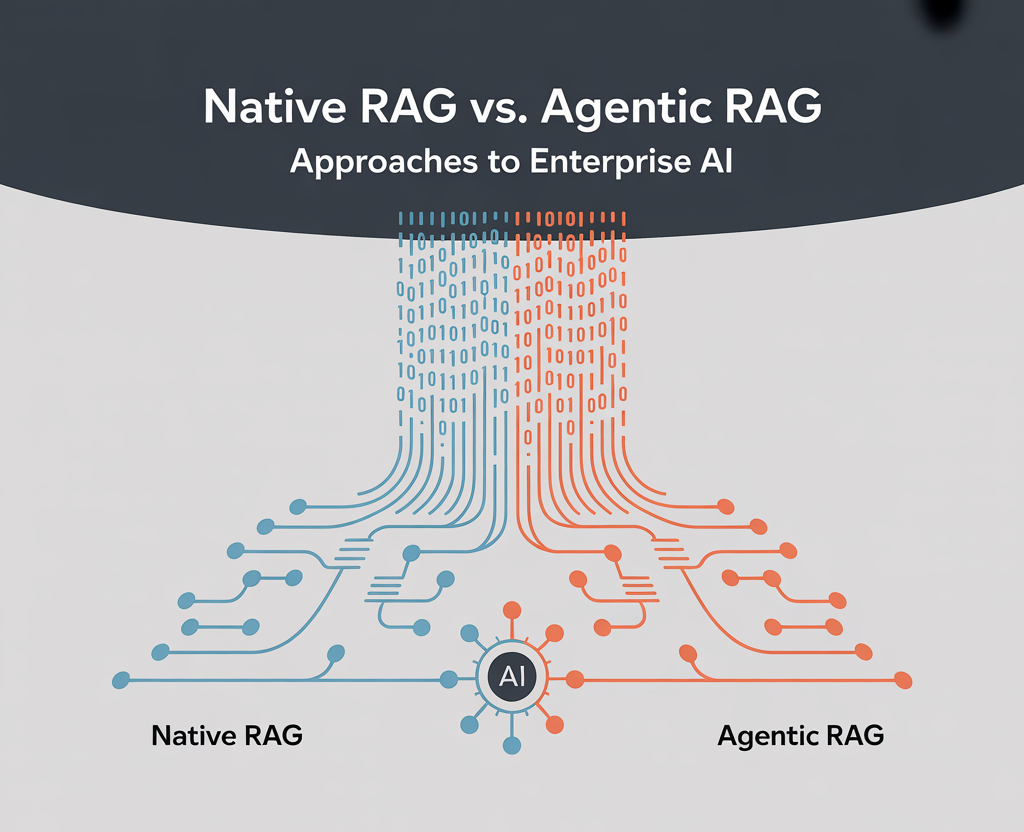

Retrieval-Augmented Generation (RAG) is rapidly becoming a core technique for grounding Large Language Models (LLMs) in up-to-date, domain-specific information. Two primary approaches have emerged: Native RAG and the newer Agentic RAG, each offering distinct capabilities for information synthesis and decision support.

Understanding Native RAG

The Native RAG approach serves as the standard pipeline for many organizations. It combines retrieval and generation methods to provide accurate and relevant answers to complex queries. The typical architecture of a Native RAG pipeline includes several key stages:

- Query Processing & Embedding: The user's question is often reformulated, then embedded into a vector representation using an LLM or a dedicated embedding model so that it can be used for semantic search.

- Retrieval: The system searches a vector database or document store and identifies the most relevant chunks using a similarity metric such as cosine similarity, Euclidean distance, or dot product.

- Reranking: Retrieved results are then reranked by relevance, recency, domain specificity, or user preferences, using rerankers that range from simple rules to advanced machine learning models.

- Synthesis & Generation: Finally, the LLM synthesizes the reranked information to generate a coherent, context-aware response tailored to the user's needs.
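The four stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the `DOCUMENTS` corpus is made up, `embed` is a toy bag-of-words stand-in for a real embedding model, `rerank` is a simple rule-based reranker, and `generate` only assembles the prompt that an LLM would receive instead of calling one. All function names are hypothetical.

```python
import math
import re
from collections import Counter

# Toy corpus standing in for a vector database (hypothetical content).
DOCUMENTS = [
    "Native RAG embeds the query and retrieves similar chunks from a vector store.",
    "Reranking reorders retrieved chunks by relevance or recency.",
    "Bananas are rich in potassium.",
]

def embed(text):
    """Toy embedding: bag-of-words counts (assumption: replaces a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, k=2):
    """Stage 2: return the k (score, doc) pairs most similar to the query."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]

def rerank(query, hits):
    """Stage 3: rule-based rerank by distinct shared terms, then by score."""
    q_terms = set(embed(query))

    def key(pair):
        score, doc = pair
        return (len(q_terms & set(embed(doc))), score)

    return sorted(hits, key=key, reverse=True)

def generate(query, hits):
    """Stage 4 placeholder: build the prompt an LLM would be given."""
    context = "\n".join(f"- {doc}" for _, doc in hits)
    return f"Context:\n{context}\n\nQuestion: {query}"

def native_rag(query, docs=DOCUMENTS, k=2):
    """Run retrieval, reranking, and prompt assembly in sequence."""
    return generate(query, rerank(query, retrieve(query, docs, k)))

if __name__ == "__main__":
    print(native_rag("How does reranking work for retrieved chunks?"))
```

Swapping `cosine` for Euclidean distance or a plain dot product changes only the scoring function; the rest of the pipeline stays the same.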

The Rise of Agentic RAG

In contrast, Agentic RAG is still in its formative stages. It aims to improve decision-making by taking a more interactive and autonomous approach to information retrieval and response generation.

According to Michal Sutter, the evolution towards Agentic RAG marks a shift to more dynamic systems that not only respond to queries but also learn and adapt based on user interactions, leading to better-informed decisions in enterprise environments.
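The article does not specify an Agentic RAG architecture, so the following is only one possible reading of "autonomous" retrieval: a loop in which the system itself decides what to look up next and when to stop, instead of running a single fixed retrieval pass. Everything here is an assumption made for illustration: the `KNOWLEDGE` store, the `search` tool, and the keyword-based `plan` function, which stands in for the reasoning step that a real agent would delegate to an LLM.

```python
import re

# Hypothetical knowledge base; a real system would query a vector store or tools.
KNOWLEDGE = {
    "pricing": "The Pro plan costs 20 USD per month.",
    "limits": "The Pro plan allows 100 requests per minute.",
    "support": "Support is available by email.",
}

def search(topic):
    """Stand-in retrieval tool: exact-topic lookup returning a list of passages."""
    passage = KNOWLEDGE.get(topic)
    return [passage] if passage else []

def plan(question, covered):
    """Stand-in for the agent's reasoning step: choose the next topic to look up.

    Assumption: a real agent would ask an LLM; here we match topic names
    that appear in the question and have not been retrieved yet.
    """
    words = set(re.findall(r"[a-z]+", question.lower()))
    for topic in KNOWLEDGE:
        if topic in words and topic not in covered:
            return topic
    return None  # nothing left to look up, so the agent stops

def agentic_rag(question, max_steps=5):
    """Loop: decide what to retrieve, retrieve it, repeat until the plan is empty."""
    evidence, covered = [], []
    for _ in range(max_steps):
        topic = plan(question, covered)
        if topic is None:
            break
        evidence.extend(search(topic))
        covered.append(topic)
    return covered, evidence

if __name__ == "__main__":
    topics, passages = agentic_rag("What are the pricing and limits of the Pro plan?")
    print(topics)
    print(passages)
```

The `max_steps` cap is a design choice worth keeping in any such loop: it bounds cost and prevents the agent from retrieving indefinitely when its stopping condition is never met.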

Conclusion

As organizations strive to leverage AI for enhanced decision-making capabilities, understanding the differences between Native RAG and Agentic RAG becomes crucial. Companies must assess which approach aligns best with their operational needs and strategic goals to effectively harness the power of AI.

Rocket Commentary

The emergence of Retrieval-Augmented Generation (RAG) marks a pivotal moment in the evolution of AI, particularly in enhancing Large Language Models (LLMs) with timely, domain-specific data. While the article outlines the structured efficiency of Native RAG, it is essential to critically assess the implications of Agentic RAG, which promises to elevate decision-making capabilities. However, as organizations increasingly adopt these technologies, we must prioritize ethical considerations and ensure that such advancements are accessible to all stakeholders. The challenge lies not just in refining the technology but in fostering an ecosystem where AI can be a transformative force across industries, enhancing productivity while safeguarding against biases and inequities. The real opportunity lies in harnessing RAG not only for efficiency but also for fostering innovation in a responsible manner.

Read the Original Article

This summary was created from the original article.