Exploring Context Engineering: Lessons from the Manus Project for AI Agents

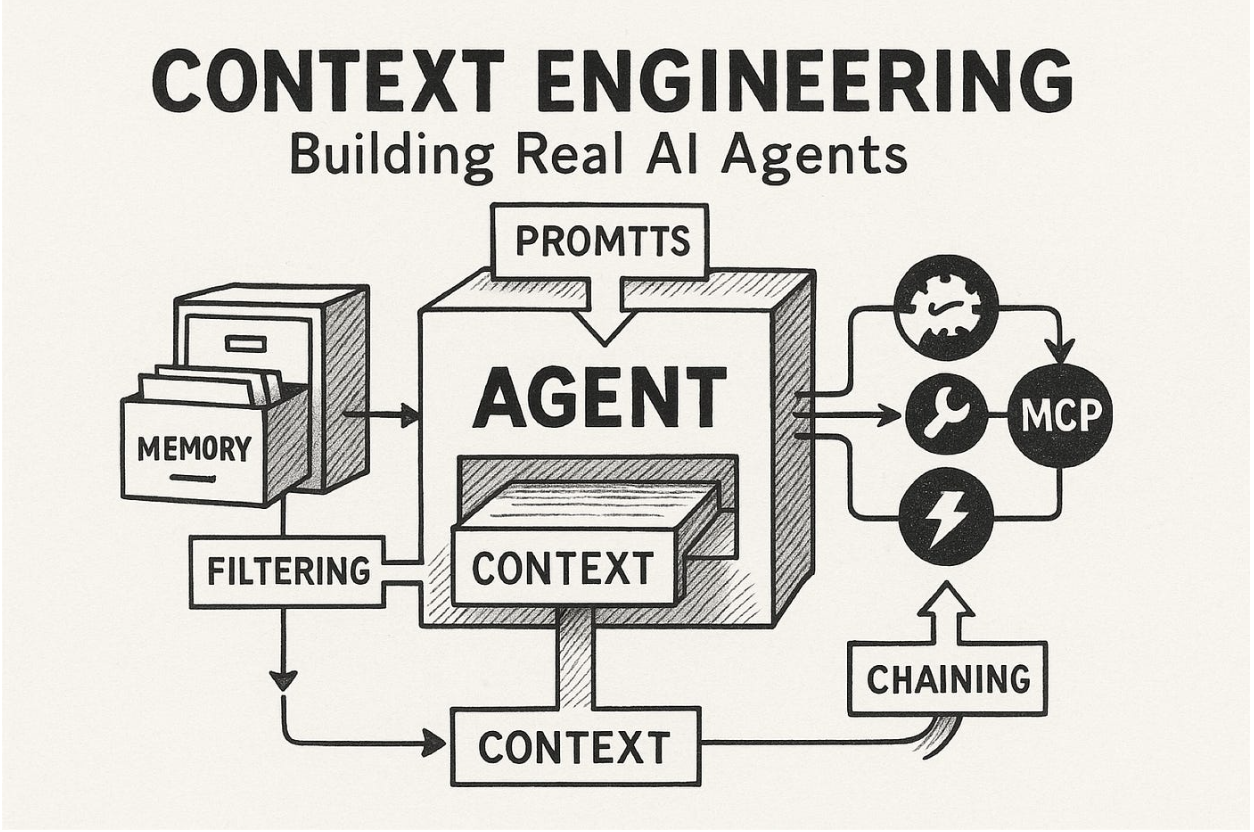

The effectiveness of an AI agent depends on more than a powerful language model. Insights from the Manus project highlight the critical role of context engineering in shaping how agents process information and make decisions. How the context is built directly affects an agent's speed, cost, and reliability.

Key Insights from Manus

The Manus team chose to build on the in-context learning capabilities of advanced models rather than on traditional, slower fine-tuning. This choice favored rapid adaptability: the team could ship improvements within hours rather than weeks.

However, the journey was not without its challenges. The team ran into enough obstacles that it rebuilt its agent framework several times, and it humorously calls this experimental, iterative way of refining its methods “Stochastic Graduate Descent.”

Critical Lessons for Context Engineering

- Design Around the KV-Cache: The KV-cache directly affects an agent's latency and operating cost. Because an agent keeps appending actions and observations to its context, its inputs grow much longer than its outputs, so reusing cached computation for the unchanged part of the input is key to keeping processing time and cost down.

- Stable Prompt Prefixes: Keep the prompt prefix consistent, because even a minor change near the start of the prompt invalidates the cache for everything that follows it. Avoid dynamic components in the prefix so that the KV-cache remains effective.
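The two lessons above can be illustrated with a small sketch. It is not the Manus implementation: the prompt text, the event strings, the per-request timestamp, and the helper names `build_context` and `shared_prefix_chars` are all hypothetical, and comparing characters is only a rough stand-in for the token-level prefix matching a real KV-cache performs.

```python
import os

# Hypothetical system prompt; any fixed text works for the illustration.
SYSTEM_PROMPT = "You are an agent. Use the available tools to complete the task."


def build_context(prefix: str, events: list[str]) -> str:
    """Append-only context: a prefix followed by actions and observations."""
    return "\n".join([prefix, *events])


def shared_prefix_chars(previous: str, current: str) -> int:
    """Number of leading characters two requests share (a proxy for cache reuse)."""
    return len(os.path.commonprefix([previous, current]))


# Step 2 only appends to what step 1 already contained.
events_step1 = ["action: search('kv cache')", "observation: 3 results"]
events_step2 = events_step1 + ["action: open(result 1)", "observation: page text"]

# Stable prefix: the whole step-1 context is a prefix of the step-2 context.
stable_1 = build_context(SYSTEM_PROMPT, events_step1)
stable_2 = build_context(SYSTEM_PROMPT, events_step2)
stable_reuse = shared_prefix_chars(stable_1, stable_2) / len(stable_1)

# Dynamic prefix: an assumed per-request timestamp at the very start
# makes the two requests diverge almost immediately.
dynamic_1 = build_context("Time: 12:00:01\n" + SYSTEM_PROMPT, events_step1)
dynamic_2 = build_context("Time: 12:00:07\n" + SYSTEM_PROMPT, events_step2)
dynamic_reuse = shared_prefix_chars(dynamic_1, dynamic_2) / len(dynamic_1)

print(f"stable prefix reuse:  {stable_reuse:.0%}")
print(f"dynamic prefix reuse: {dynamic_reuse:.0%}")
```

With the stable prefix, everything sent in the first request can in principle be served from cache in the second. With the timestamp in front, the shared portion ends within the first line, even though the rest of the context is identical.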

These lessons show that careful design and management of context directly improve AI agent performance. As the field matures, understanding the mechanics of context engineering will be crucial for developers and organizations that want to get the most out of AI agents.

Rocket Commentary

The Manus project illustrates a pivotal shift in AI development, emphasizing context engineering as essential for enhancing AI agent performance. This approach challenges the conventional reliance on fine-tuning, showcasing how rapid adaptability can yield faster and more efficient results. However, the implications extend beyond mere speed; they raise critical questions about the transparency and ethical use of such adaptive AI systems. As businesses increasingly integrate these technologies, the focus must remain on ensuring accessibility and ethical standards. This balance is crucial to harness AI's transformative potential while safeguarding against unforeseen biases and ensuring reliability in decision-making processes.

This summary was created from the original article.