Enhancing Transformer Training Efficiency with DeepSpeed Techniques

Training large language models efficiently has become a practical necessity as models outgrow the memory of a single GPU. A recent tutorial by Asif Razzaq takes a hands-on approach to DeepSpeed, Microsoft's open-source deep learning optimization library, and walks through techniques that reduce memory use and speed up the training of transformer models.

Key Techniques Overview

The tutorial focuses on several cutting-edge methods, including:

- ZeRO Optimization: The Zero Redundancy Optimizer partitions optimizer states, gradients, and (at stage 3) model parameters across data-parallel GPUs instead of replicating them on every device, so much larger models fit on the same hardware.

- Mixed-Precision Training: Running most computations in 16-bit floating point (FP16 or BF16) while keeping an FP32 master copy of the weights reduces memory consumption and speeds up training on hardware with fast half-precision math.

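- Gradient Accumulation: Gradients from several small micro-batches are accumulated before each optimizer step, so a large effective batch size can be trained on GPUs that cannot hold the full batch in memory. This is especially useful in resource-constrained environments.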

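- Advanced DeepSpeed Configurations: DeepSpeed is driven by a JSON configuration that sets batch sizes, the optimizer, numerical precision, and the ZeRO stage; tuning these settings to the model architecture and hardware improves throughput and memory use.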
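As a rough illustration of how these four techniques meet in one place, the sketch below builds a DeepSpeed configuration as a Python dictionary. It is not taken from the tutorial: the GPU count, batch sizes, learning rate, and the `check_batch_sizes` helper are hypothetical choices for illustration. The keys are standard DeepSpeed config fields, and the helper checks the identity DeepSpeed expects between the global batch size, the per-GPU micro-batch size, the number of accumulation steps, and the number of GPUs.

```python
import json

# Hypothetical values chosen for illustration; tune them for your model and hardware.
WORLD_SIZE = 8           # assumed number of GPUs (data-parallel ranks)
MICRO_BATCH_PER_GPU = 4  # samples per GPU in each forward/backward pass
GRAD_ACCUM_STEPS = 8     # micro-batches accumulated before each optimizer step

ds_config = {
    # DeepSpeed expects: train_batch_size ==
    #   train_micro_batch_size_per_gpu * gradient_accumulation_steps * world_size
    "train_batch_size": MICRO_BATCH_PER_GPU * GRAD_ACCUM_STEPS * WORLD_SIZE,
    "train_micro_batch_size_per_gpu": MICRO_BATCH_PER_GPU,
    "gradient_accumulation_steps": GRAD_ACCUM_STEPS,
    "optimizer": {"type": "AdamW", "params": {"lr": 3e-4, "weight_decay": 0.01}},
    # Mixed precision: FP16 with dynamic loss scaling (loss_scale = 0 means dynamic).
    "fp16": {"enabled": True, "loss_scale": 0},
    # ZeRO stage 2 partitions optimizer states and gradients across ranks.
    "zero_optimization": {
        "stage": 2,
        "overlap_comm": True,
        "contiguous_gradients": True,
    },
    "gradient_clipping": 1.0,
    "steps_per_print": 100,
}


def check_batch_sizes(config: dict, world_size: int) -> int:
    """Hypothetical helper: verify the batch-size identity and return the effective batch size."""
    micro = config["train_micro_batch_size_per_gpu"]
    accum = config["gradient_accumulation_steps"]
    expected = micro * accum * world_size
    if config["train_batch_size"] != expected:
        raise ValueError(
            f"train_batch_size={config['train_batch_size']} but "
            f"{micro} * {accum} * {world_size} = {expected}"
        )
    return expected


print("effective batch size:", check_batch_sizes(ds_config, WORLD_SIZE))
print(json.dumps(ds_config, indent=2))
```

The dictionary can be passed to `deepspeed.initialize` through its `config` argument or saved as a JSON file. Raising `gradient_accumulation_steps` while lowering `train_micro_batch_size_per_gpu` keeps the effective batch size fixed while cutting per-GPU activation memory.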

Comprehensive Learning Experience

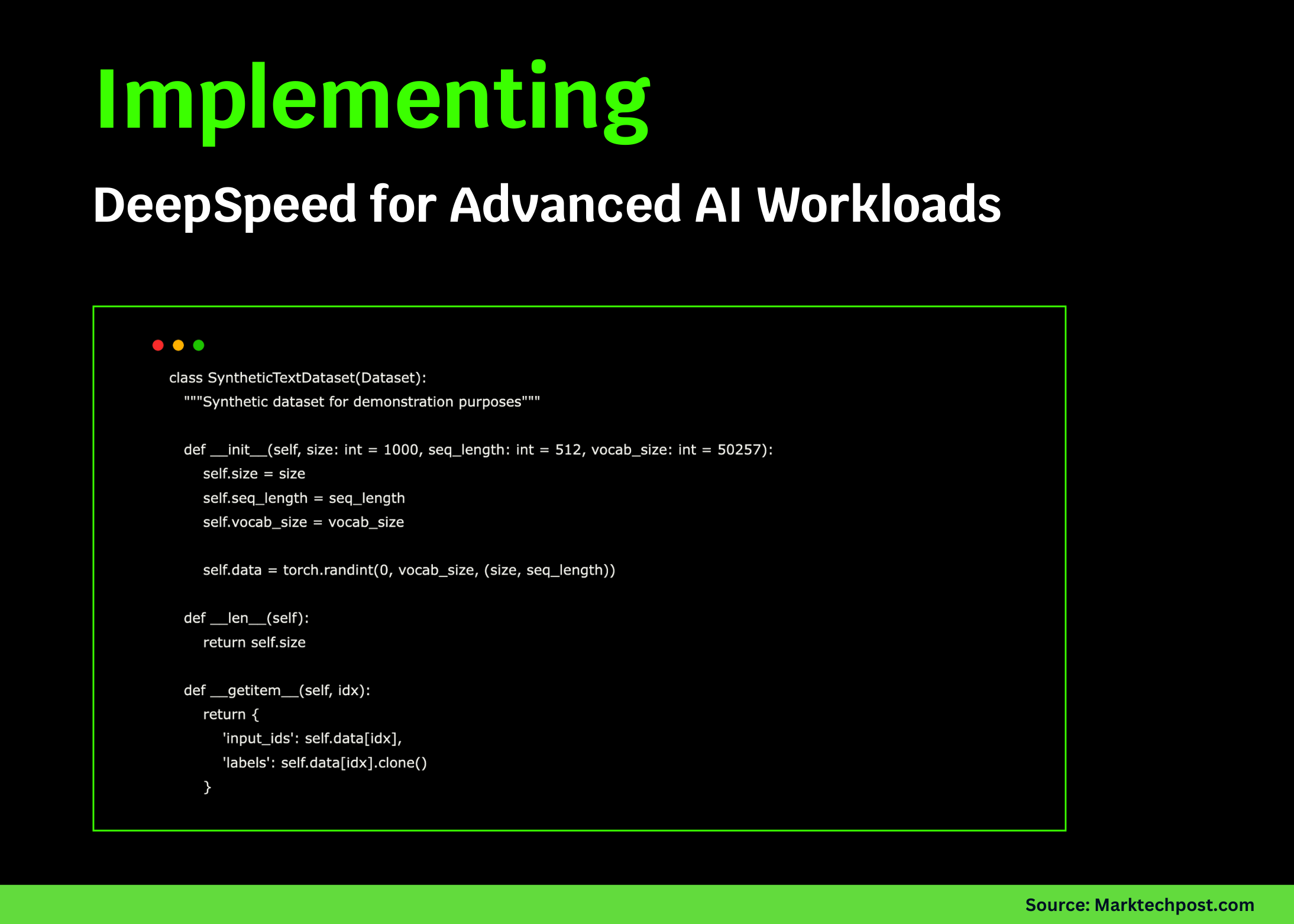

The tutorial provides not only theoretical insights but also practical coding examples designed to help practitioners implement these techniques. It covers essential aspects of:

- Model creation and training

- Performance monitoring

- Inference optimization

- Checkpointing for model recovery

- Benchmarking different stages of ZeRO optimization

These steps matter for developers who want faster training workflows without sacrificing reliability: benchmarking shows whether a given optimization actually pays off, and checkpointing lets long training runs resume after a failure.
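To give a sense of what benchmarking the ZeRO stages can reveal, the sketch below estimates per-GPU memory for model states using the accounting from the ZeRO paper: with mixed-precision Adam, each parameter costs 2 bytes (FP16 weights), 2 bytes (FP16 gradients), and 12 bytes (FP32 master weights plus Adam momentum and variance). The function name and the example model size and GPU count are illustrative assumptions, and the estimate ignores activations, temporary buffers, and memory fragmentation, so measured numbers will be higher.

```python
def zero_model_state_bytes(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    """Approximate per-GPU bytes for model states under mixed-precision Adam.

    2 bytes/param for FP16 weights, 2 bytes/param for FP16 gradients, and
    k bytes/param of FP32 optimizer state. Activations and buffers are ignored.
    """
    psi, n = num_params, num_gpus
    if stage == 0:  # plain data parallelism: every GPU holds a full copy of everything
        return (2 + 2 + k) * psi
    if stage == 1:  # partition optimizer states
        return 2 * psi + 2 * psi + k * psi / n
    if stage == 2:  # partition optimizer states and gradients
        return 2 * psi + (2 + k) * psi / n
    if stage == 3:  # partition optimizer states, gradients, and parameters
        return (2 + 2 + k) * psi / n
    raise ValueError(f"unknown ZeRO stage: {stage}")


# Illustrative example: a 7.5-billion-parameter model on 64 GPUs.
for stage in range(4):
    gb = zero_model_state_bytes(7.5e9, 64, stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For this example the loop prints about 120, 31.4, 16.6, and 1.9 GB for stages 0 through 3, which matches the figures reported in the ZeRO paper. Actual benchmarks are still needed to capture activation memory and the extra communication that higher stages introduce.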

Conclusion

As the demand for advanced AI applications continues to grow, mastering these techniques will be essential for professionals in the field. The tutorial by Razzaq serves as a valuable resource for those aiming to stay at the forefront of AI development and optimize their machine learning projects effectively.

Rocket Commentary

The tutorial by Asif Razzaq on DeepSpeed offers a practical guide to optimizing large language model training, particularly through techniques like ZeRO optimization and mixed-precision training. While these techniques significantly improve training efficiency, we must remain vigilant about their accessibility. As AI continues to evolve, ensuring that these advanced methods are available to a broader range of developers, not just those with extensive resources, is crucial. This democratization of technology will enable more ethical and transformative applications of AI, fostering a landscape where innovation drives meaningful business outcomes and societal benefits. The industry stands at a crossroads where responsible deployment of such tools can redefine productivity and creativity in AI development.

Read the Original Article

This summary was created from the original article.