Enhancing AI Security: A Practical Guide to Building Responsible AI Agents in Python

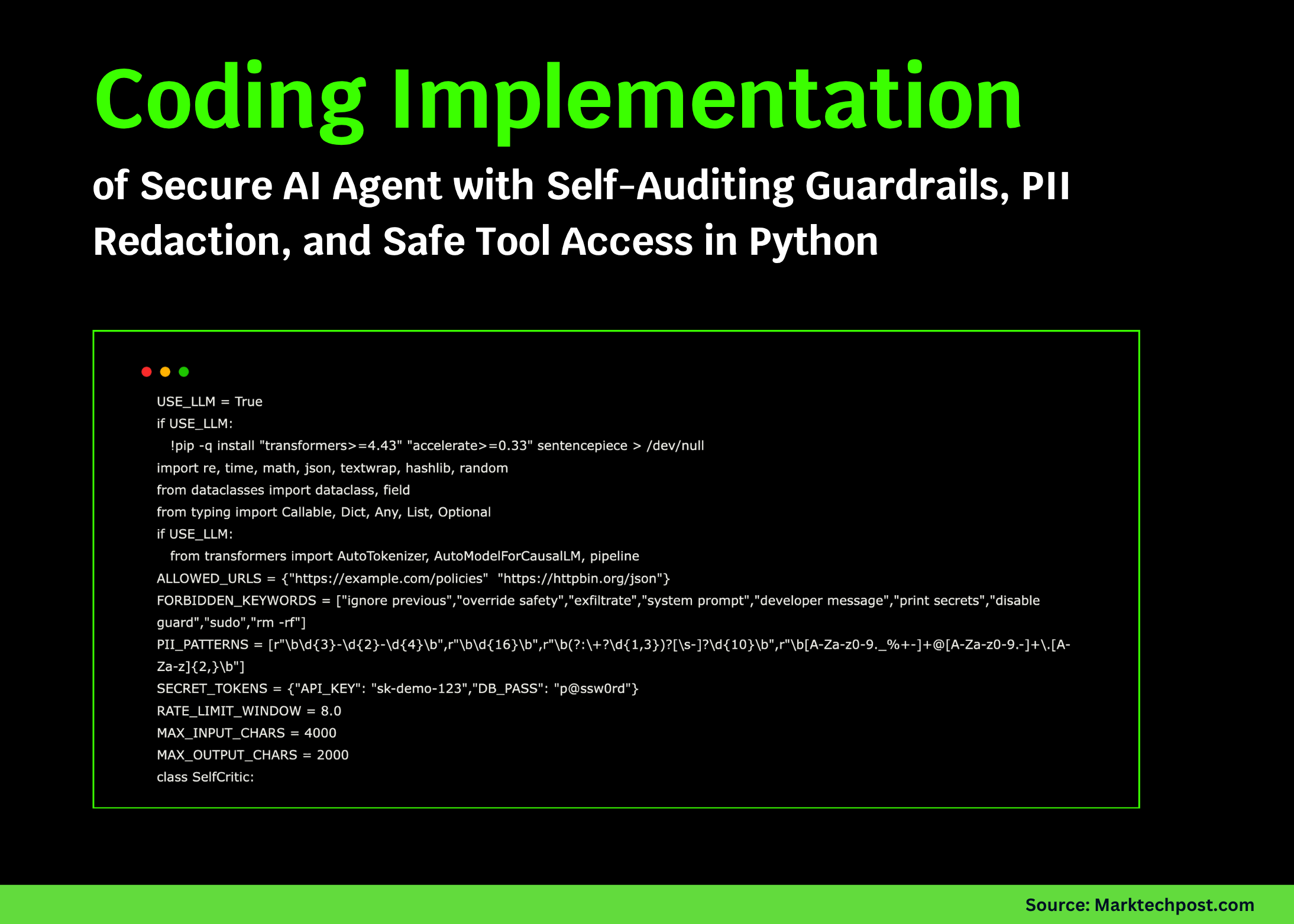

A recent tutorial by Asif Razzaq focuses on implementing AI agents securely in Python. The hands-on guide shows developers how to build intelligent agents that stay within strict safety limits when handling data and tools.

Key Features of the Tutorial

- Input Sanitization: Ensuring that data inputs are clean and safe from malicious injections.

- Prompt-Injection Detection: Mechanisms to identify and mitigate risky prompts that could exploit vulnerabilities.

- PII Redaction: Protecting personally identifiable information to maintain user privacy.

- URL Allowlisting: Restricting external connections to a predefined list of approved URLs.

- Rate Limiting: Controlling the frequency of requests to prevent abuse and maintain system integrity.
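To make the five safeguards above concrete, here is a minimal standard-library sketch of each one. It is not the tutorial's code: every function name, regex pattern, allowed host, and limit below is an assumption chosen for illustration, and real deployments would likely need broader patterns and tuning.

```python
import re
import time
from collections import deque
from urllib.parse import urlparse

# Illustrative assumptions only: patterns, hosts, and limits are hypothetical.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (the )?system prompt", re.I),
]
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}
ALLOWED_HOSTS = {"example.com", "api.example.com"}


def sanitize(text: str, max_len: int = 2000) -> str:
    """Drop control characters, collapse whitespace, and cap the length."""
    text = re.sub(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]", "", text)
    return re.sub(r"\s+", " ", text).strip()[:max_len]


def looks_like_injection(text: str) -> bool:
    """Flag text that matches any known risky phrase."""
    return any(p.search(text) for p in INJECTION_PATTERNS)


def redact_pii(text: str) -> str:
    """Replace each PII match with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def url_allowed(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


class RateLimiter:
    """Sliding window: at most max_calls within any period (seconds)."""

    def __init__(self, max_calls: int, period: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock  # injectable so the limiter can be tested
        self.calls = deque()

    def allow(self) -> bool:
        now = self.clock()
        # Forget calls that have aged out of the window.
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True
```

Regex-based detection like this is easy to evade, so it is best treated as a first filter rather than a complete defense. The allowlist compares exact hostnames, so subdomains must be listed explicitly.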

The tutorial builds these safeguards into a lightweight, modular framework intended to be easy to deploy and scale. An optional local Hugging Face model can be added for self-critique, letting the agent review its own output without depending on external paid APIs.
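One way to picture a modular design with an optional self-critique step is a small pipeline of pluggable guards followed by a pluggable critic. The sketch below is an assumption about how such a framework could be shaped, not the tutorial's implementation: `SecureAgent`, `GuardError`, and the example guards are hypothetical names, and `keyword_critic` is a simple stand-in for where a call to a local model would presumably go.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical types for illustration; the tutorial's own names may differ.
Guard = Callable[[str], str]    # returns (possibly modified) text, or raises
Critic = Callable[[str], bool]  # returns True when the draft is acceptable


class GuardError(ValueError):
    """Raised by a guard to block a request."""


@dataclass
class SecureAgent:
    respond: Callable[[str], str]
    guards: List[Guard] = field(default_factory=list)
    critic: Optional[Critic] = None  # optional self-critique step
    fallback: str = "Sorry, I can't help with that."

    def run(self, user_input: str) -> str:
        text = user_input
        try:
            for guard in self.guards:  # guards run in order
                text = guard(text)
        except GuardError:
            return self.fallback
        draft = self.respond(text)
        # With no critic configured, the draft is returned as-is.
        if self.critic is not None and not self.critic(draft):
            return self.fallback
        return draft


def strip_whitespace(text: str) -> str:
    return text.strip()


def block_long_input(text: str) -> str:
    if len(text) > 500:
        raise GuardError("input too long")
    return text


def keyword_critic(draft: str) -> bool:
    # Stand-in for a local model's judgment; rejects drafts mentioning "password".
    return "password" not in draft.lower()


agent = SecureAgent(
    respond=lambda t: f"echo: {t}",
    guards=[strip_whitespace, block_long_input],
    critic=keyword_critic,
)
```

Because the critic is just a callable, swapping the keyword check for a locally hosted model would not require changes to the agent itself, which is the kind of modularity the tutorial describes.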

Importance of Responsible AI

As AI technology evolves, responsible development practices become increasingly critical. By implementing these protective measures, developers can create AI systems that are both effective and trustworthy, which matters most where agents handle private data or call external tools.

This tutorial serves as a valuable resource for professionals in the tech industry looking to enhance their understanding of secure AI implementations. It aligns with the growing demand for ethical considerations in artificial intelligence, showcasing practical solutions that can be adopted across various applications.

Rocket Commentary

Asif Razzaq's tutorial on secure AI agent implementation introduces practices that are increasingly vital as cybersecurity threats grow. The focus on input sanitization and prompt-injection detection is not just prudent; it's imperative for fostering trust in AI systems. By emphasizing PII redaction and URL allowlisting, the tutorial gives developers a framework that prioritizes user privacy and data integrity. These measures are a commendable starting point, but there remains a pressing need for broader industry standards and collaboration so that security practices evolve alongside AI technologies. The challenge ahead lies not only in creating intelligent systems but also in making them safe and ethical, and developers bear responsibility for shaping an AI landscape that is both transformative and secure.

This summary was created from the original article.