Dynamic Fine-Tuning: Enhancing Generalization in Supervised Learning for LLMs

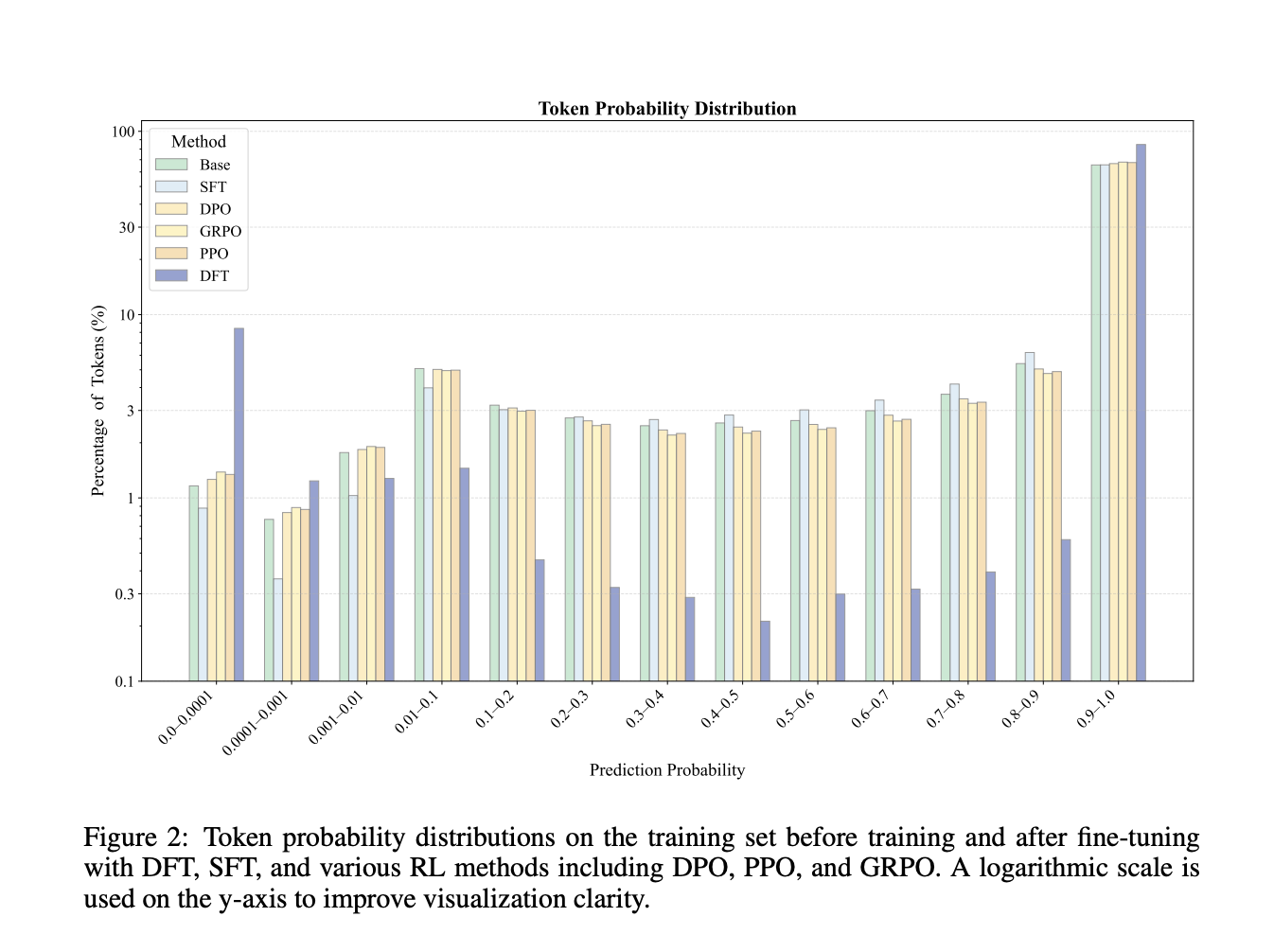

Supervised Fine-Tuning (SFT) is a widely used technique for adapting Large Language Models (LLMs) to new tasks. It trains the model on expert demonstration datasets, which lets it acquire expert-like behavior quickly. Despite these advantages, SFT often generalizes worse than Reinforcement Learning (RL), which lets models explore a wider range of strategies and tends to generalize better as a result.

RL, however, demands substantial computational resources, careful hyperparameter tuning, and reward signals that are not always available. This raises an essential question: can SFT be fundamentally improved to narrow its generalization gap with RL, especially when datasets lack negative samples or no reward model exists?

Exploring Hybrid Approaches

Attempts to address the limitations of SFT and RL have produced several hybrid methods. A common approach starts with an SFT phase and follows it with RL refinement, as in InstructGPT. Other methods interleave SFT and RL steps, or use Direct Preference Optimization (DPO), which trains directly on preference pairs without a separate reward model and aims to integrate imitation and reinforcement signals efficiently.
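To make the DPO idea more concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is an illustration rather than code from the article: the function name `dpo_loss`, its argument names, and the default `beta=0.1` are assumptions chosen for this example, and the inputs are assumed to be summed log-probabilities of whole responses under the trained policy and a frozen reference model.

```python
import math


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    All names here are hypothetical. Each argument is the total
    log-probability of a full response under the policy or the
    frozen reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_logp_chosen - ref_logp_chosen
    rejected_margin = policy_logp_rejected - ref_logp_rejected

    # Scaled difference of the two margins (the implicit reward gap).
    z = beta * (chosen_margin - rejected_margin)

    # -log(sigmoid(z)), written in a numerically stable form.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the policy and the reference assign identical log-probabilities, the scaled gap is zero and the loss equals log 2. The loss falls below that value as the policy shifts probability toward the chosen response relative to the rejected one, which is how preference pairs stand in for an explicit reward model.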

New Techniques in Fine-Tuning

More recent work has introduced techniques such as Negative-aware Fine-Tuning (NFT), which lets models improve themselves by learning from their own incorrect outputs. Theoretical work has also tried to unify SFT and RL by treating SFT as a reward-weighted or implicit form of reinforcement learning. These theories, however, often stop short of a comprehensive framework for closing the generalization gap.
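One way to see the "SFT as implicit RL" reading is to rewrite the SFT gradient as an expectation over the model's own samples. The sketch below is not taken from the article, and its notation is assumed for illustration: $\pi_\theta$ is the model, $\mathcal{D}$ the demonstration dataset, and $y^\ast$ the expert response for prompt $x$. The second equality holds whenever $\pi_\theta(y^\ast \mid x) > 0$.

$$
\begin{aligned}
\nabla_\theta \mathcal{L}_{\mathrm{SFT}}(\theta)
&= -\,\mathbb{E}_{(x,\,y^\ast) \sim \mathcal{D}}\big[\nabla_\theta \log \pi_\theta(y^\ast \mid x)\big] \\
&= -\,\mathbb{E}_{(x,\,y^\ast) \sim \mathcal{D}}\;\mathbb{E}_{y \sim \pi_\theta(\cdot \mid x)}
\left[\frac{\mathbf{1}[y = y^\ast]}{\pi_\theta(y \mid x)}\,\nabla_\theta \log \pi_\theta(y \mid x)\right]
\end{aligned}
$$

Compared with a policy gradient of the form $\mathbb{E}_{y \sim \pi_\theta}\big[r(x, y)\,\nabla_\theta \log \pi_\theta(y \mid x)\big]$, this looks like RL with a sparse implicit reward $\mathbf{1}[y = y^\ast]$ and an extra weight $1 / \pi_\theta(y \mid x)$, which grows large when the model assigns low probability to the expert response.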

As the field evolves, improving SFT remains an important open problem, particularly for practitioners who need to optimize LLM performance across diverse applications.

Rocket Commentary

The limitations of Supervised Fine-Tuning (SFT) relative to Reinforcement Learning (RL) mark a critical crossroads in AI development. SFT offers a quick path to emulating expert behavior, but its weaker generalization signals a need for innovation. The industry's continued reliance on RL, despite its resource demands, suggests that a balance must be struck between efficiency and adaptability. As businesses increasingly integrate AI, the challenge is to make these techniques not only powerful but also accessible and ethically sound. An SFT method that generalizes well could democratize AI capabilities and enable transformative applications for a wider range of users and industries.
