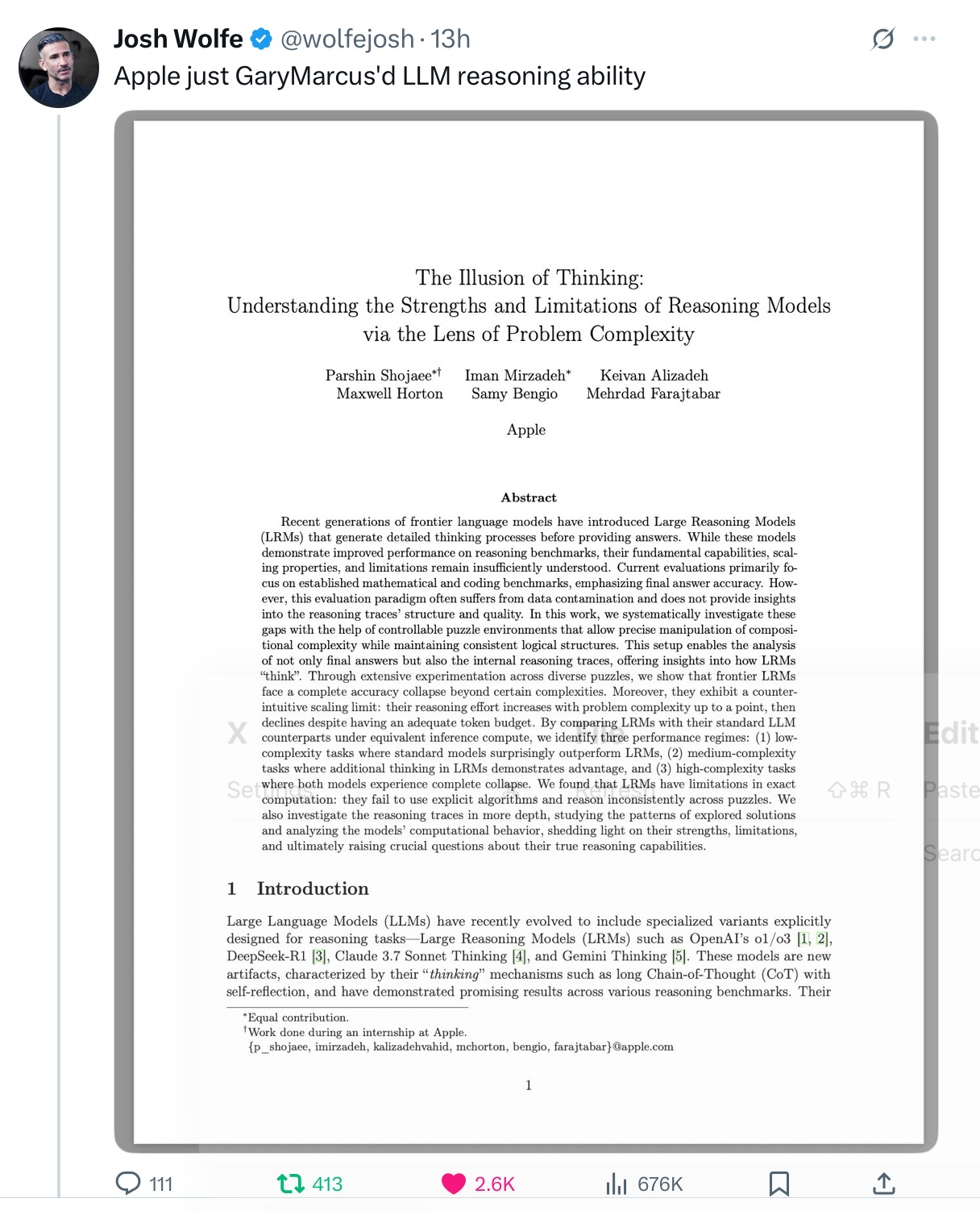

Apple's Latest Paper Raises Serious Concerns for LLMs

In a recent discussion, venture capitalist Josh Wolfe of Lux Capital highlighted a significant new paper from Apple that casts doubt on the reasoning capabilities of large language models (LLMs). Wolfe's commentary suggests that the findings could pose a serious challenge for LLM proponents.

Key Insights from the Paper

According to Wolfe, the paper is a powerful follow-up to previous work by the same authors and presents strong arguments against the reasoning abilities of LLMs. He notes that the argument has an interesting weakness. Even so, its overall impact is strong enough that LLM advocates may have to concede these shortcomings, even as they hope for better results in the future.

The Argument Against LLMs

The core of the paper echoes a long-standing critique of how neural networks generalize relative to their training distribution. Wolfe references his own work, which dates back to 1998, emphasizing that neural networks can generalize effectively to new examples that fall within the distribution of data they were trained on. However, this generalization often breaks down when they face data outside that training distribution.

- Historical Context: Wolfe points out that similar arguments appeared in his earlier publications, which demonstrated the limitations of multilayer perceptrons, the precursors to current LLMs.

As the conversation around LLMs evolves, this new research raises important questions about the future of AI and its applications across many fields.

Looking Ahead

Despite the challenges posed by Apple's findings, LLM supporters may still remain optimistic about the technology's potential. The ongoing debate around these models will likely shape future research directions and innovation in artificial intelligence.

Rocket Commentary

Josh Wolfe's insights on Apple's recent paper challenge the prevailing narrative surrounding large language models (LLMs) by highlighting fundamental shortcomings in their reasoning abilities. This critical stance invites a necessary reevaluation of LLM capabilities, urging proponents to recognize the technology's limitations while still aspiring to future innovations. As we navigate this complex landscape, it is crucial to ensure that AI remains accessible, ethical, and transformative. By addressing these challenges head-on, we can foster an environment where LLMs evolve responsibly, ultimately enhancing their practical impact on business and development. The dialogue sparked by Wolfe's commentary may serve as a catalyst for more rigorous scrutiny and meaningful advances in AI technology.

Read the Original Article

This summary was created from the original article; the full story is available from the source.